Rancher

Guest Cluster Log Collection

You can collect guest cluster logs and configuration files. Perform the following steps on each guest cluster node:

- Log in to the node.

- Download the Rancher v2.x Linux log collector script and generate a log bundle using the following commands:

curl -OLs https://raw.githubusercontent.com/rancherlabs/support-tools/master/collection/rancher/v2.x/logs-collector/rancher2_logs_collector.sh

sudo bash rancher2_logs_collector.sh

The output of the script indicates the location of the generated tarball.

For more information, see The Rancher v2.x Linux log collector script.

Importing of Harvester Clusters into Rancher

After the cluster-registration-url is set on Harvester, a deployment named cattle-system/cattle-cluster-agent is created to import the Harvester cluster into Rancher.

Import Pending Due to unable to read CA file Error

The following error messages in the cattle-cluster-agent-* pod logs indicate that the Harvester cluster cannot be imported into Rancher.

2025-02-13T17:25:22.520593546Z time="2025-02-13T17:25:22Z" level=info msg="Rancher agent version v2.10.2 is starting"

2025-02-13T17:25:22.529886868Z time="2025-02-13T17:25:22Z" level=error msg="unable to read CA file from /etc/kubernetes/ssl/certs/serverca: open /etc/kubernetes/ssl/certs/serverca: no such file or directory"

2025-02-13T17:25:22.529924542Z time="2025-02-13T17:25:22Z" level=error msg="Strict CA verification is enabled but encountered error finding root CA"

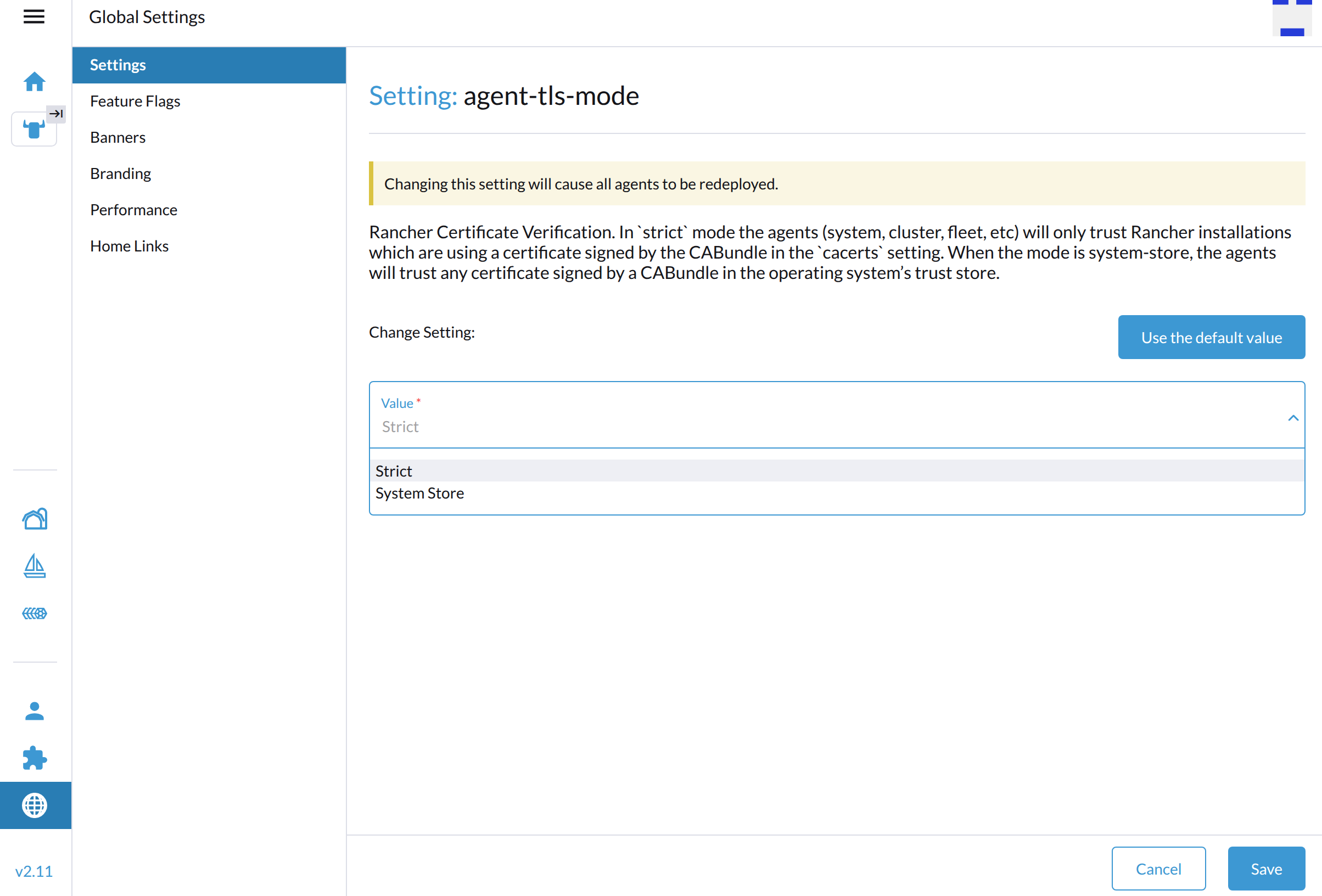
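To check for these messages without reading the logs by hand, a small filter along the following lines may help. This is only a sketch: `has_ca_error` is a hypothetical helper name, and the usage comment assumes you have kubectl access to the Harvester cluster.

```shell
#!/usr/bin/env bash
# Sketch: detect the CA verification failure in agent log text.
# has_ca_error is a hypothetical helper; it reads log lines on stdin and
# succeeds (exit 0) if either error message from this section is present.
has_ca_error() {
  grep -Eq 'unable to read CA file|Strict CA verification is enabled'
}

# Usage (assumes kubectl access to the Harvester cluster):
#   kubectl -n cattle-system logs deployment/cattle-cluster-agent | has_ca_error \
#     && echo "CA verification error found in cattle-cluster-agent logs"
```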

The root cause is that the agent-tls-mode setting is not configured correctly for your certificate setup.

Rancher's agent-tls-mode setting controls how Rancher's agents (cluster-agent, fleet-agent, and system-agent) validate Rancher's certificate when establishing a connection. You can set either of the following values:

- strict: Rancher's agents only trust certificates generated by the Certificate Authority (CA) specified in the cacerts setting. This is the recommended default TLS setting that guarantees a higher level of security.

  Although the strict option enables a higher level of security, it requires Rancher to have access to the CA which generated the certificate visible to the agents. In the case of certain certificate configurations (notably, external certificates), this is not automatic, and extra configuration is needed. See the installation guide for more information on which scenarios require extra configuration.

- system-store: Rancher's agents trust any certificate generated by a public Certificate Authority specified in the operating system's trust store. Use this setting if your setup uses an external trust authority and you don't have ownership over the Certificate Authority.

  Important: Using the system-store setting implies that the agent trusts all external authorities found in the operating system's trust store, including those outside of the user's control.

The default value of this setting depends on the Rancher version and installation type. For more information, see Rancher issue #45628.

| Type | Versions | Default Value |
|---|---|---|
| New installation | v2.8 | system-store |
| New installation | v2.9 and later | strict |
| Upgrade | v2.8 to v2.9 | system-store |

Follow the steps below to configure the different TLS setting options.

- Log in to the Rancher UI.

- Go to Global Settings > Settings.

- Select agent-tls-mode, and then select ⋮ > Edit Setting to access the configuration options.

- Set Value to System Store or Strict.

- Click Save.
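If you prefer the command line, the same setting can likely be inspected and changed with kubectl on the cluster where Rancher runs. The sketch below assumes that the setting is exposed as a `settings.management.cattle.io` resource named `agent-tls-mode` with a top-level `value` field; `agent_tls_mode_patch` is a hypothetical helper that only builds the patch body and rejects unsupported values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a JSON merge patch for the agent-tls-mode setting.
# Only the two values described in this section are accepted.
agent_tls_mode_patch() {
  case "$1" in
    strict|system-store)
      printf '{"value":"%s"}\n' "$1"
      ;;
    *)
      echo "unsupported agent-tls-mode value: $1" >&2
      return 1
      ;;
  esac
}

# Usage (assumption: run against the cluster that hosts Rancher):
#   kubectl get settings.management.cattle.io agent-tls-mode
#   kubectl patch settings.management.cattle.io agent-tls-mode \
#     --type merge -p "$(agent_tls_mode_patch system-store)"
```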

Related issues:

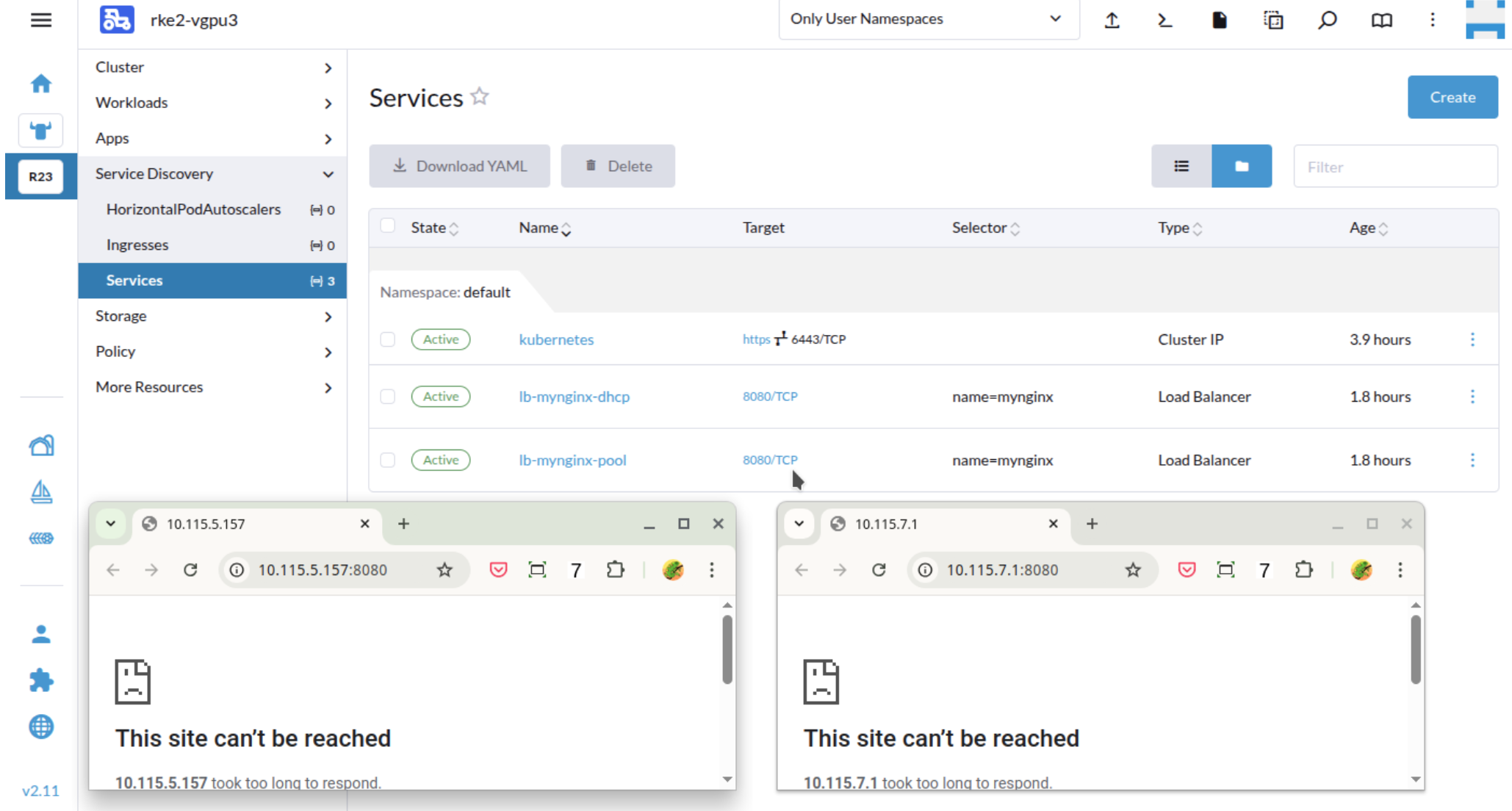

Guest Cluster Loadbalancer IP is not reachable

Issue Description

- Create a new guest cluster with the default Container Network: Calico and the default Cloud Provider: Harvester.

- Deploy nginx on this new guest cluster using the command kubectl apply -f https://k8s.io/examples/application/deployment.yaml.

- Create a Load Balancer that selects the nginx backend.

- The service is ready with an IP allocated from the DHCP server or IPPool, but the page might fail to load when you click the link.

Root Cause

In the example below, the IP of the guest cluster node (a Harvester VM) is 10.115.1.46, and a new load balancer IP, 10.115.6.200, is later added to a new interface such as vip-fd8c28ce (@enp1s0). However, the Calico controller takes over the load balancer IP, which makes the IP unreachable. To confirm this, open a shell session on the node using the original IP and run the following command:

$ ip -d link show dev vxlan.calico

44: vxlan.calico: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 66:a7:41:00:1d:ba brd ff:ff:ff:ff:ff:ff promiscuity 0 allmulti 0 minmtu 68 maxmtu 65535

info: Using default fan map value (33)

vxlan id 4096 local 10.115.6.200 dev vip-8a928fa0 srcport 0 0 dstport 4789 nolearning ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535 tso_max_size 65536 tso_max_segs 65535 gro_max_size 65536

The IP 10.115.6.200 is from the vip-* interface.
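The check above can be scripted. The following sketch parses the output of `ip -d link show dev vxlan.calico` and reports whether the VXLAN local address is bound to a vip-* interface. Both function names are hypothetical, and the parsing assumes the `vxlan id ... local <ip> dev <device>` layout shown in the sample output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ip -d link show dev vxlan.calico` output on stdin
# and print "<local-ip> <device>" taken from the "local <ip> dev <device>" pair.
vxlan_local() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i == "local") ip = $(i + 1)
      if ($i == "dev" && ip != "" && dev == "") dev = $(i + 1)
    }
  } END { if (ip != "") print ip, dev }'
}

# Hypothetical helper: succeed (exit 0) if the VXLAN device is a vip-* interface.
vxlan_uses_vip() {
  case "$(vxlan_local | awk '{print $2}')" in
    vip-*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on a guest cluster node:
#   ip -d link show dev vxlan.calico | vxlan_uses_vip \
#     && echo "vxlan.calico is bound to the load balancer VIP"
```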

Affected versions

IP autodetection has been available since Calico v3.22 or even earlier, with first-found as the default method.

SUSE RKE2 v1.29 ships with Calico v3.29.2, and RKE2 v1.35 ships with Calico v3.31.2.

As a result, most recent RKE2 clusters that use Calico as the default CNI and the Harvester cloud provider to offer LoadBalancer-type services might be affected by this issue.

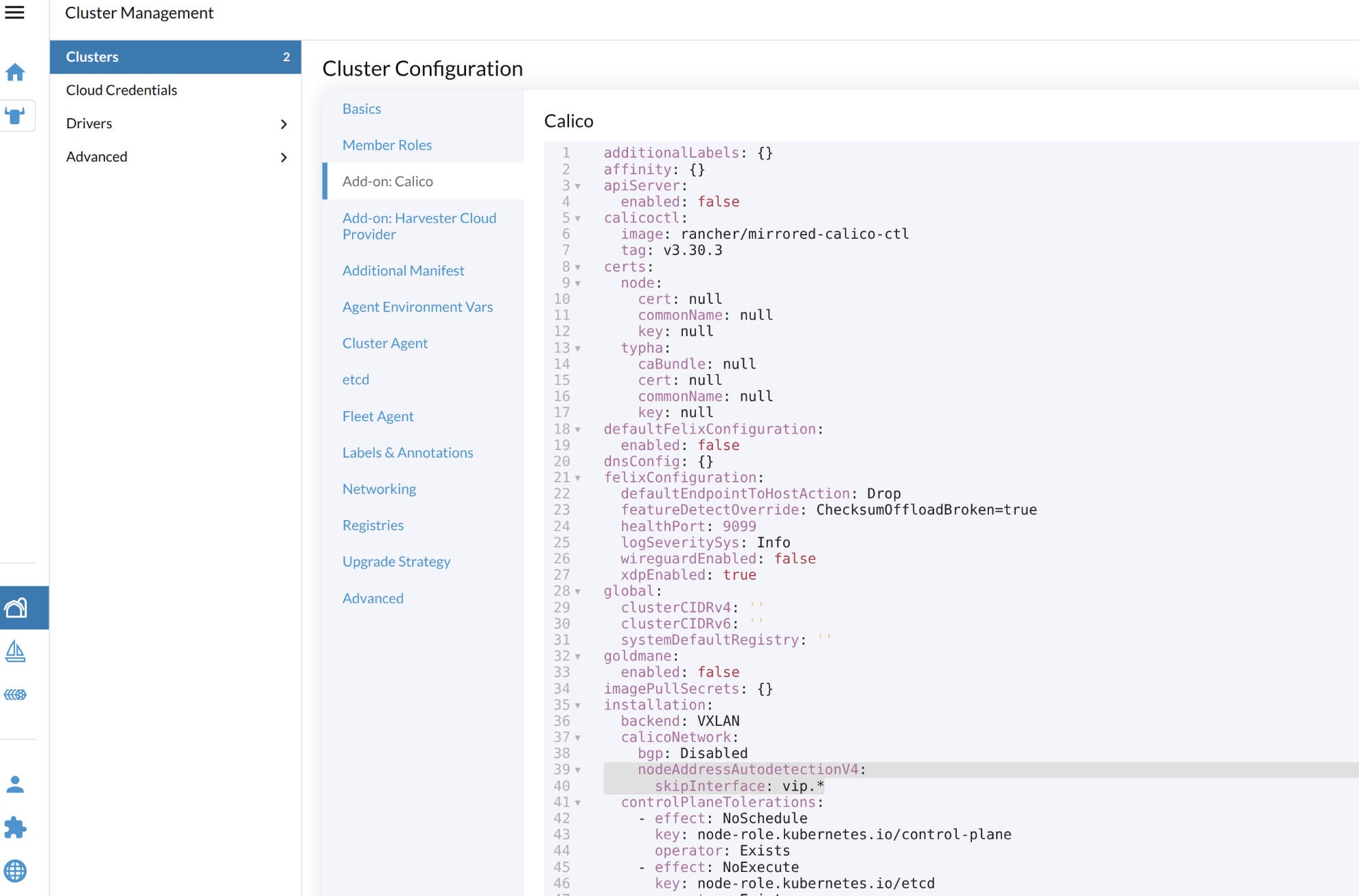

Workaround

For newly created cluster

When creating a new cluster in Rancher Manager, click Add-on: Calico to display the YAML configuration window. Add the following two lines to .installation.calicoNetwork:

installation:
  backend: VXLAN
  calicoNetwork:
    bgp: Disabled
    nodeAddressAutodetectionV4: # add this line
      skipInterface: vip.* # add this line

With this configuration, the Calico controller no longer takes over the load balancer IP.

For existing clusters

Run kubectl edit installation, go to the .spec.calicoNetwork.nodeAddressAutodetectionV4 section, remove any existing line such as firstFound: true, add the line skipInterface: vip.*, and then save the change.
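As a non-interactive alternative to editing the resource, the same change could be applied with a JSON merge patch, where a null value removes firstFound. This is a sketch under two assumptions: the Installation resource is named `default` (the usual name created by the Tigera operator), and `autodetect_patch` is a hypothetical helper that only prints the patch body.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a JSON merge patch that removes firstFound and
# sets skipInterface. The optional argument is the interface regex and
# defaults to vip.* (it must not contain quotes or backslashes).
autodetect_patch() {
  printf '{"spec":{"calicoNetwork":{"nodeAddressAutodetectionV4":{"firstFound":null,"skipInterface":"%s"}}}}\n' "${1:-vip.*}"
}

# Usage (assumption: the Installation resource is named "default"):
#   kubectl patch installation default --type merge -p "$(autodetect_patch)"
#   kubectl -n calico-system rollout status daemonset/calico-node --timeout=5m
```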

Wait about two minutes for the rolling update of the calico-system/calico-node daemonset to complete. The related pods then use the node IP for VXLAN.

Run the following command to check whether the vxlan.calico interface uses the node IP (for example, 10.115.1.46) instead of the VIP.

$ ip -d link show dev vxlan.calico

45: vxlan.calico: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 66:a7:41:00:1d:ba brd ff:ff:ff:ff:ff:ff promiscuity 0 allmulti 0 minmtu 68 maxmtu 65535

info: Using default fan map value (33)

vxlan id 4096 local 10.115.1.46 dev enp1s0 srcport 0 0 dstport 4789 nolearning ttl auto ageing 300 udpcsum noudp6zerocsumtx noudp6zerocsumrx addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535 tso_max_size 65536 tso_max_segs 65535 gro_max_size 65536

If the interface still uses the VIP, check the tigera-operator pod log for the keyword failed calling webhook.

$ kubectl -n tigera-operator logs tigera-operator-8566d6db5c-wfjkt

...

{"level":"error","ts":"2025-12-18T09:06:37Z","msg":"Reconciler error","controller":"tigera-installation-controller","object":{"name":"periodic-5m0s-reconcile-event"},"namespace":"","name":"periodic-5m0s-reconcile-event","reconcileID":"bae9d2da-a4bf-4d8b-89b8-c8a23a96f351","error":"Internal error occurred: failed calling webhook \"rancher.cattle.io.namespaces\": failed to call webhook: Post \"https://rancher-webhook.cattle-system.svc:443/v1/webhook/validation/namespaces?timeout=10s\": context deadline exceeded"...}

If this error occurs, update the calico-system/calico-node daemonset directly by adding the following environment variable to the container:

- name: IP_AUTODETECTION_METHOD
  value: skip-interface=vip.*

Wait two minutes, and then check the vxlan.calico interface again. Once the interface no longer takes over the VIP, the VIP remains reachable.