PCI Devices

Available as of v1.1.0

A PCIDevice in Harvester represents a host device with a PCI address.

The devices can be passed through the hypervisor to a VM by creating a PCIDeviceClaim resource, or by using the UI to enable passthrough. Passing a device through the hypervisor means that the VM can directly access the device, and effectively owns the device. A VM can even install its own drivers for that device.
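For readers who prefer the resource route over the UI, the following is a minimal sketch of what a PCIDeviceClaim manifest might look like, built as a Python dict. The `apiVersion`, the `spec` field names (`address`, `nodeName`, `userName`), and the object naming convention are assumptions that are not stated on this page; verify them with `kubectl explain pcideviceclaim` on your cluster before applying anything. The helper name `build_pci_device_claim` and the sample values are hypothetical.

```python
import json


def build_pci_device_claim(node_name: str, address: str, user_name: str) -> dict:
    """Build a PCIDeviceClaim manifest as a plain dict.

    The apiVersion and spec field names below are assumptions; confirm them
    against the CRD installed by the pcidevices-controller addon.
    """
    # Assumed naming convention: node name plus the PCI address with
    # ':' and '.' removed.
    compact_address = address.replace(":", "").replace(".", "")
    return {
        "apiVersion": "devices.harvesterhci.io/v1beta1",  # assumed
        "kind": "PCIDeviceClaim",
        "metadata": {"name": f"{node_name}-{compact_address}"},
        "spec": {
            "address": address,      # PCI address on the host
            "nodeName": node_name,   # node that owns the device
            "userName": user_name,   # user requesting the claim
        },
    }


if __name__ == "__main__":
    # Hypothetical node name, address, and user.
    claim = build_pci_device_claim("node1", "0000:04:10.0", "admin")
    print(json.dumps(claim, indent=2))
```

Because JSON is also valid YAML, the printed manifest can be saved to a file and applied with `kubectl apply -f`, once the field names have been checked.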

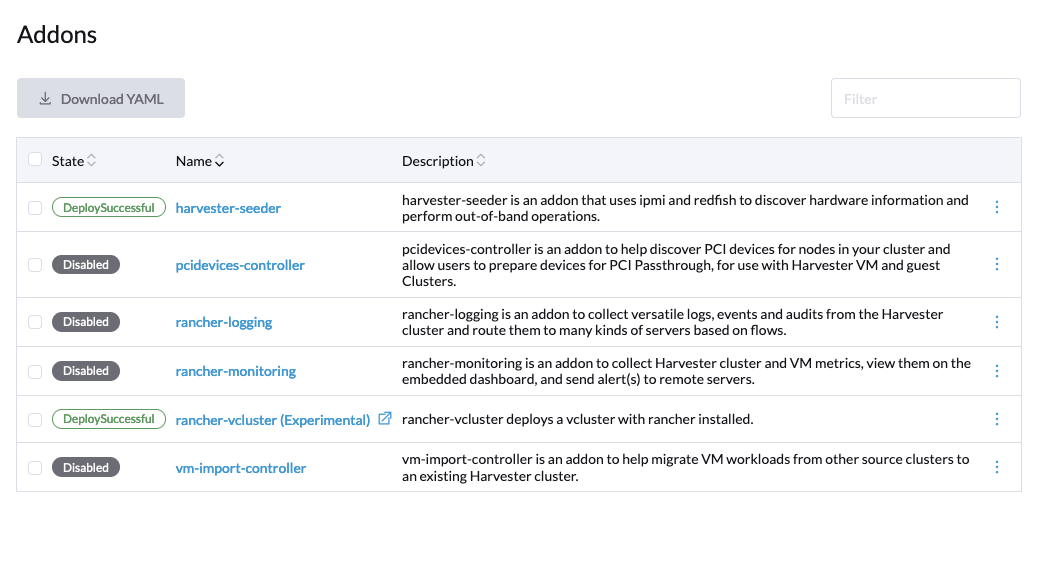

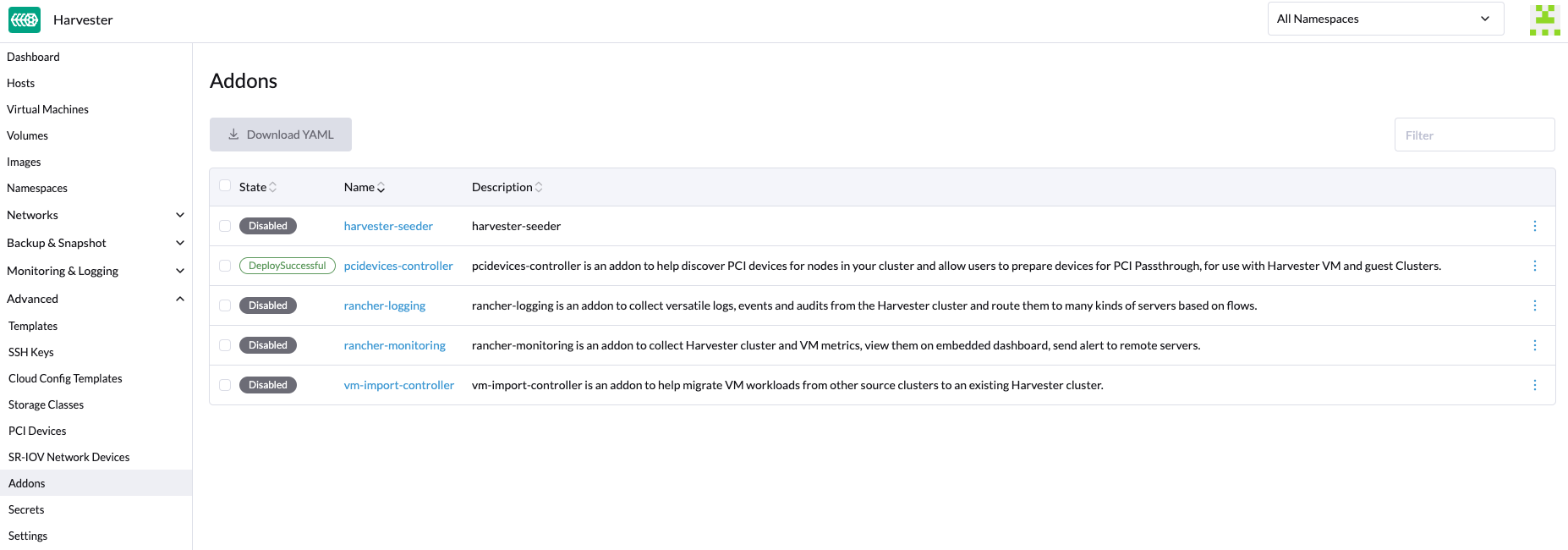

This is accomplished by using the pcidevices-controller addon.

To use the PCI devices feature, users need to enable the pcidevices-controller addon first.

Once the pcidevices-controller addon is deployed successfully, it can take a few minutes for the addon to finish scanning and for the PCIDevice CRDs to become available.

Enabling Passthrough on a PCI Device

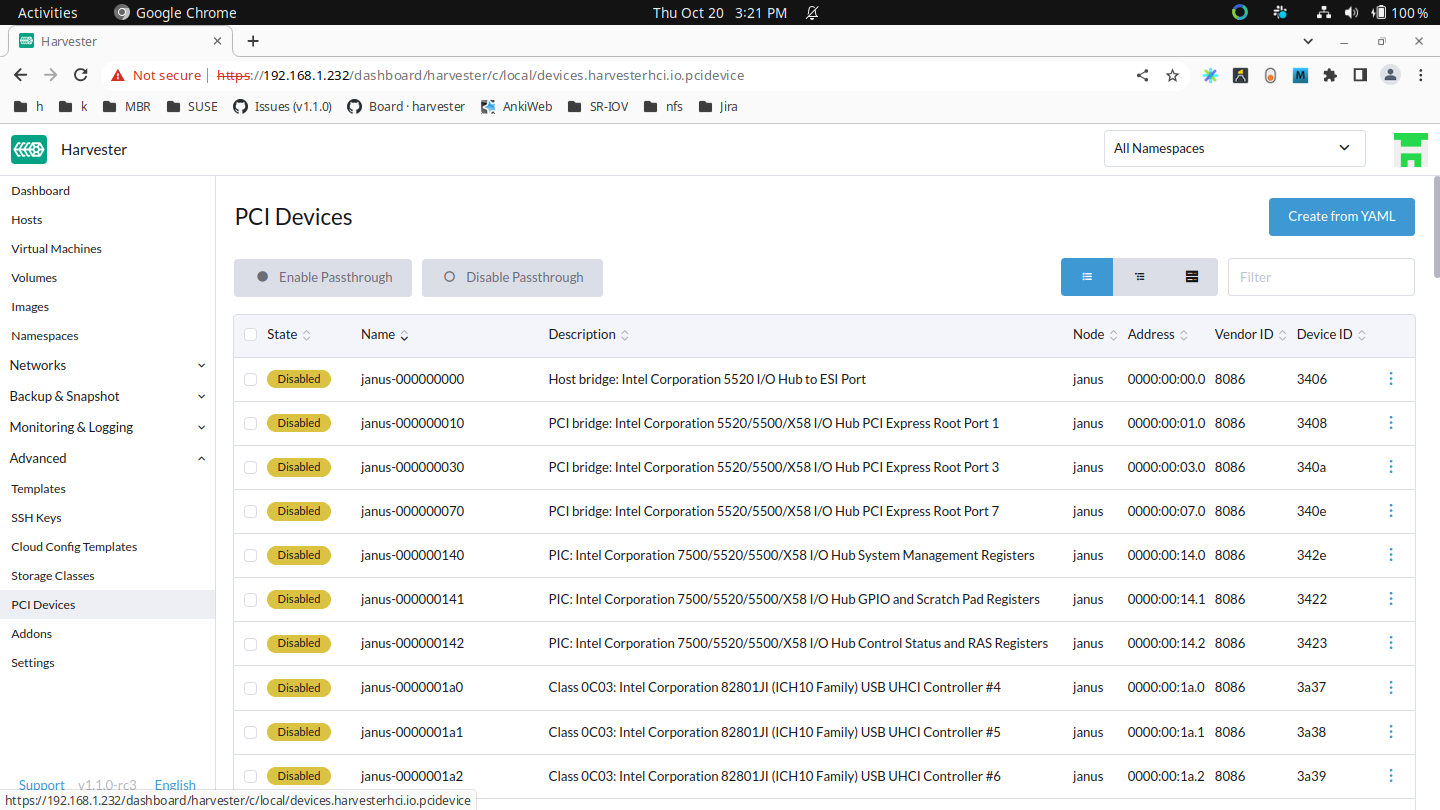

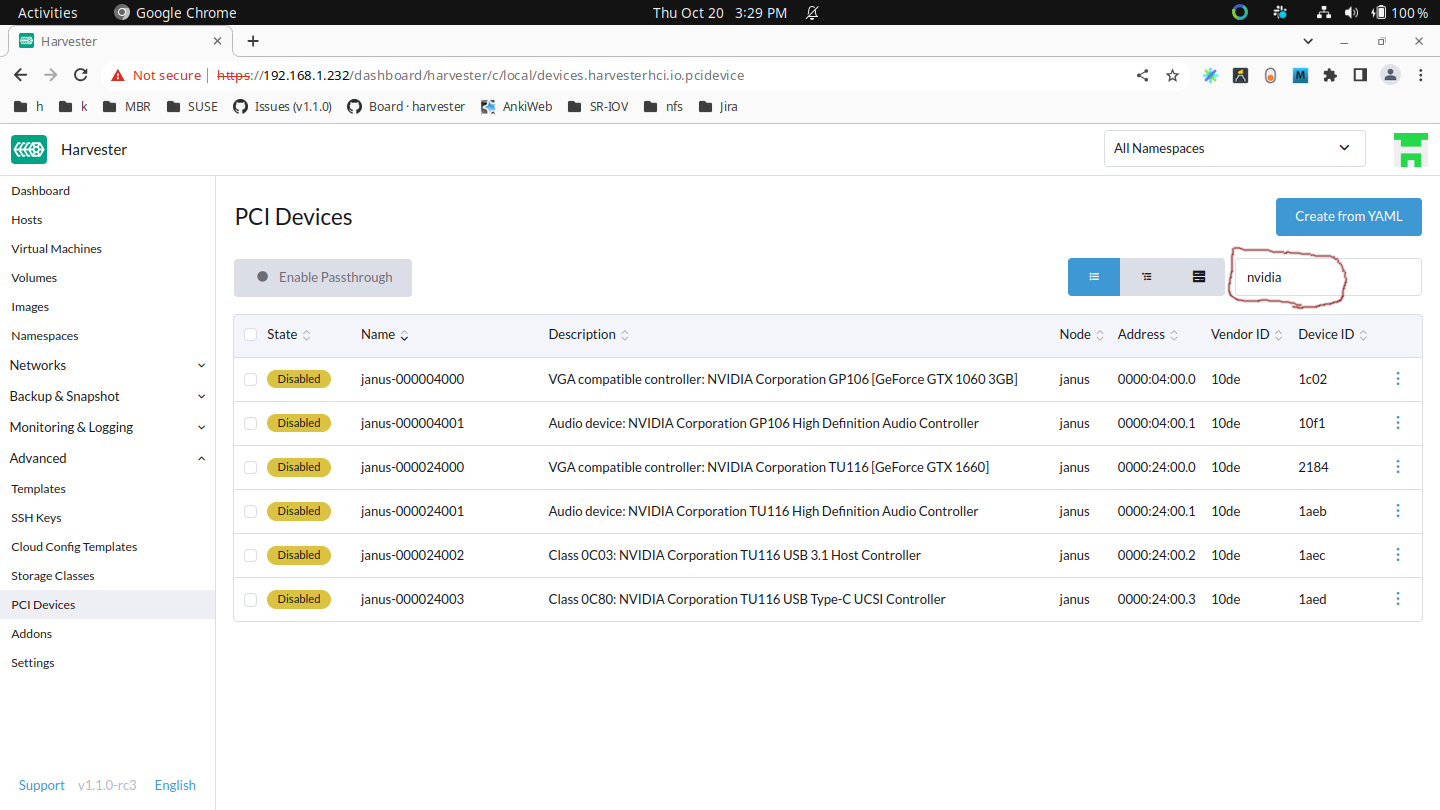

Now go to the

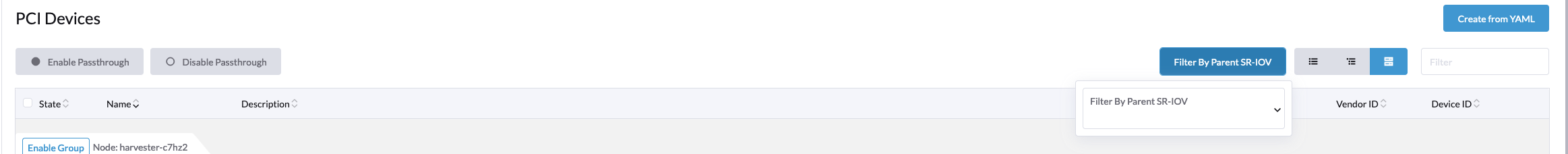

Advanced -> PCI Devicespage:

Search for your device by vendor name (e.g. NVIDIA, Intel, etc.) or device name.

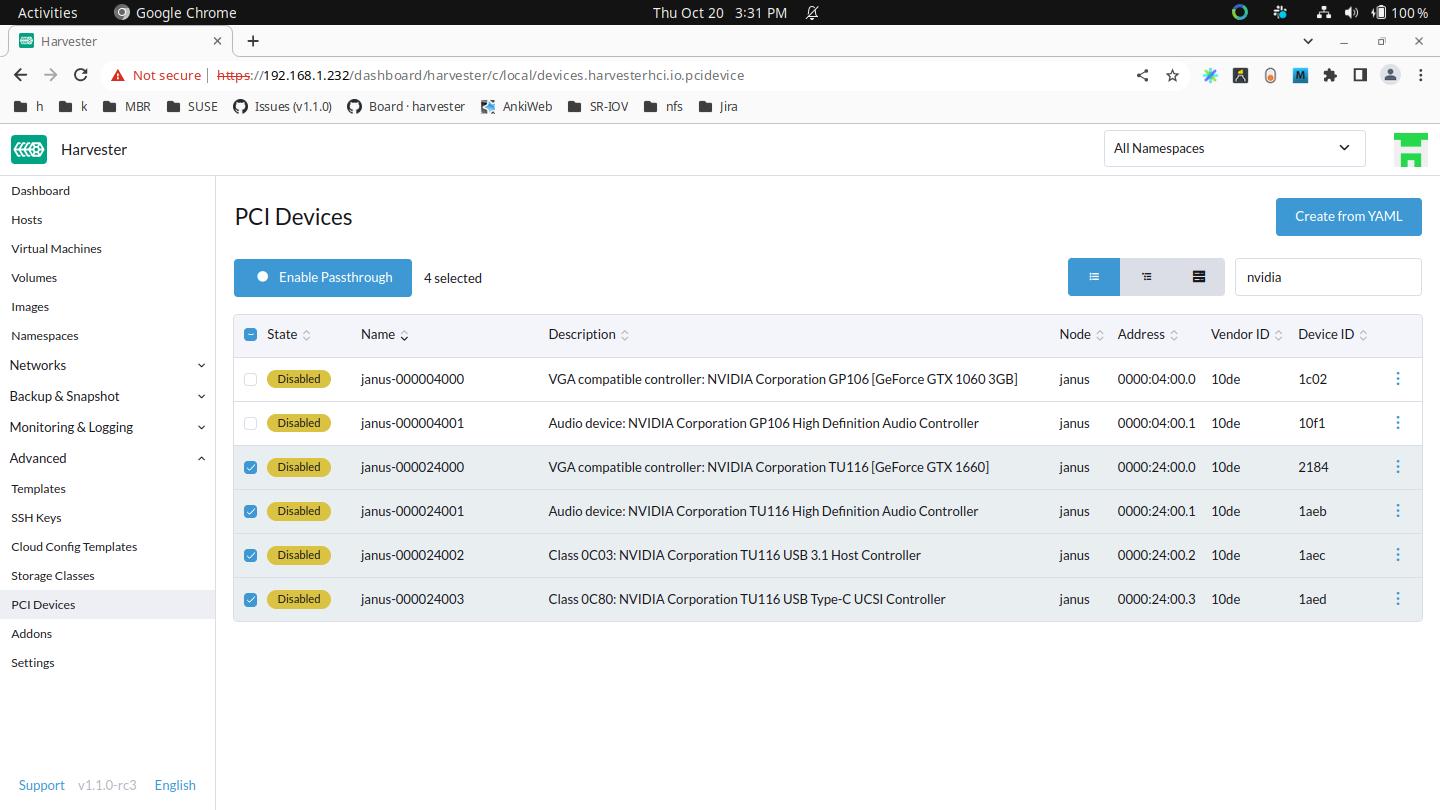

Select the devices you want to enable for passthrough:

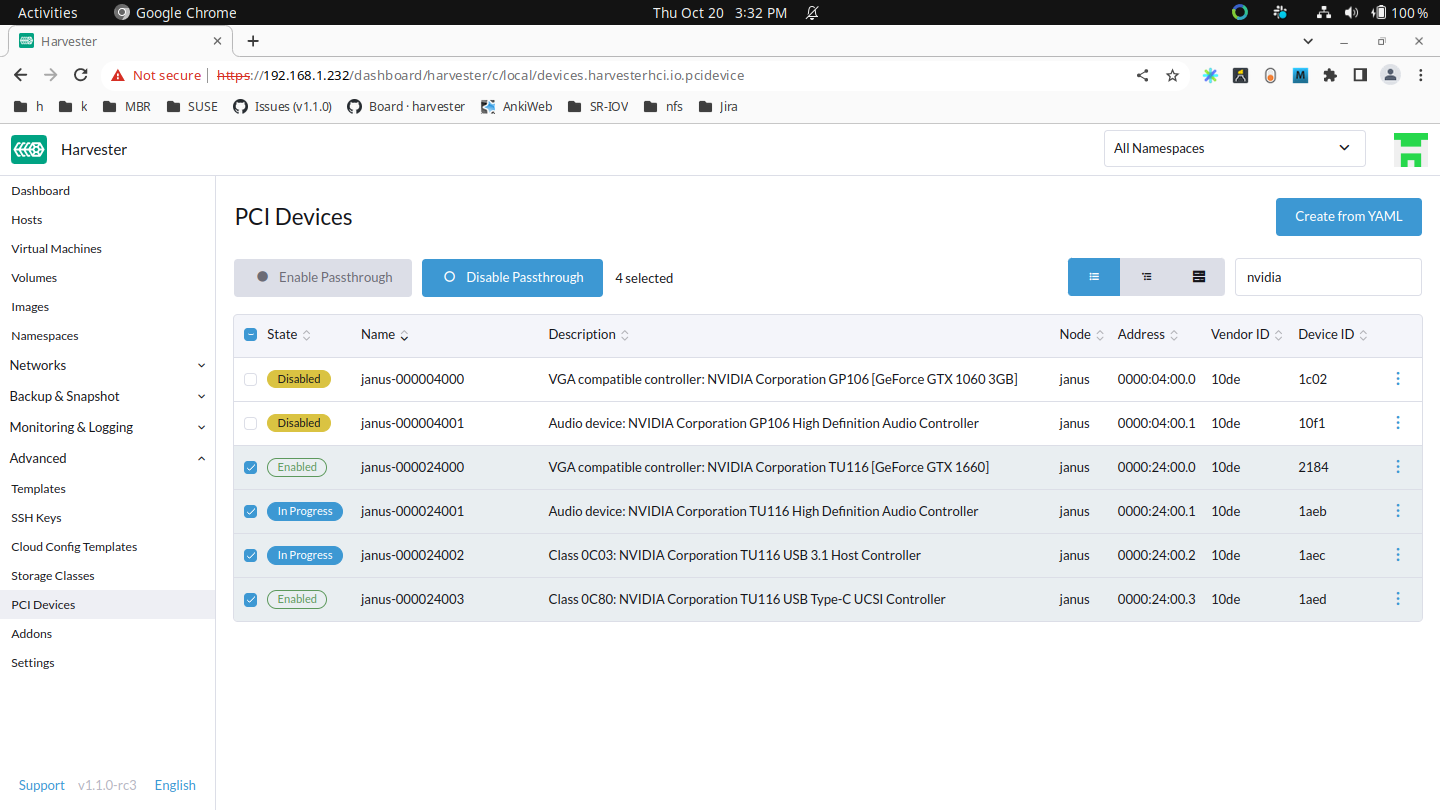

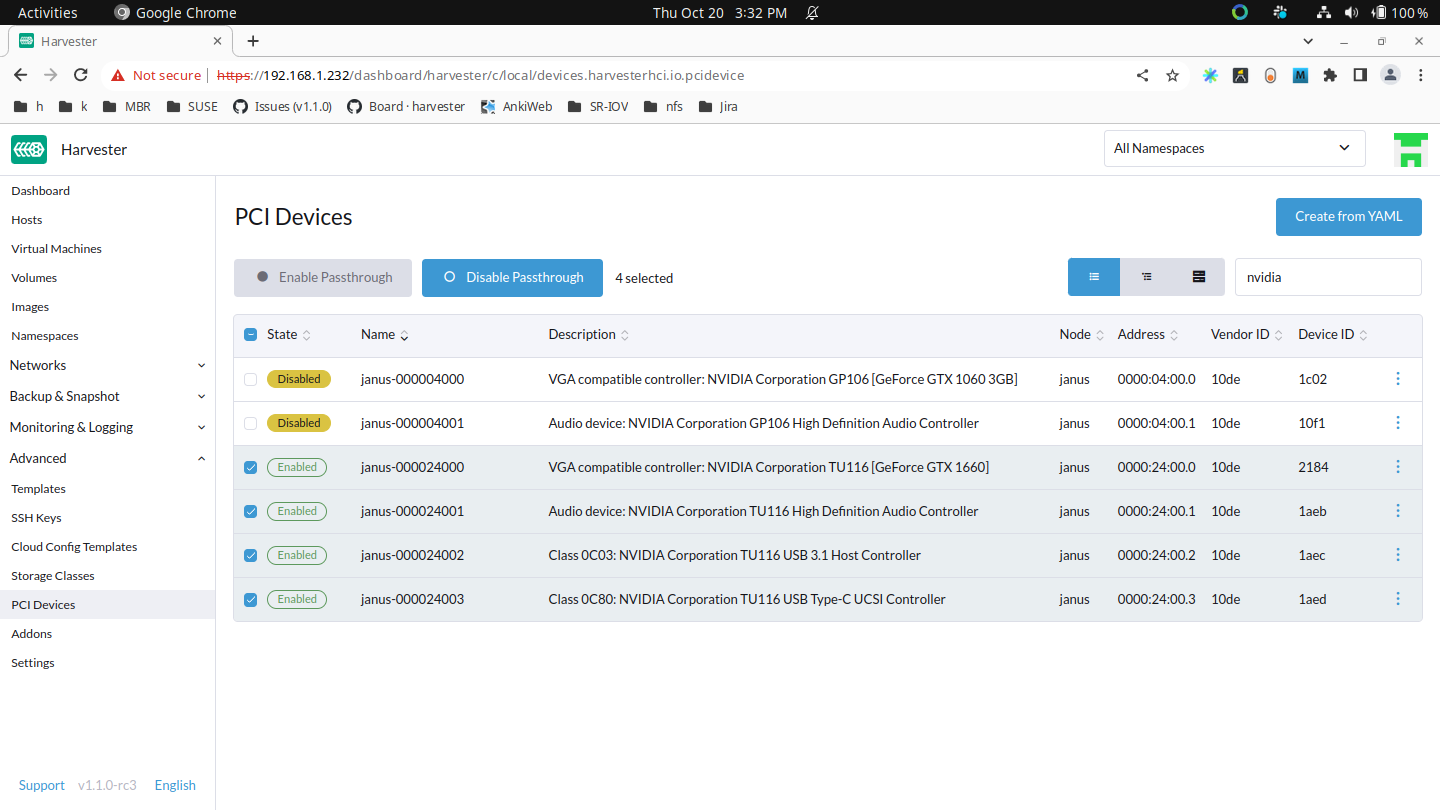

Then click Enable Passthrough and read the warning message. If you still want to enable these devices, click Enable and wait for all devices to be Enabled.

Caution: Please do not use host-owned PCI devices (e.g., management and VLAN NICs). Incorrect device allocation may cause damage to your cluster, including node failure.

Attaching PCI Devices to a VM

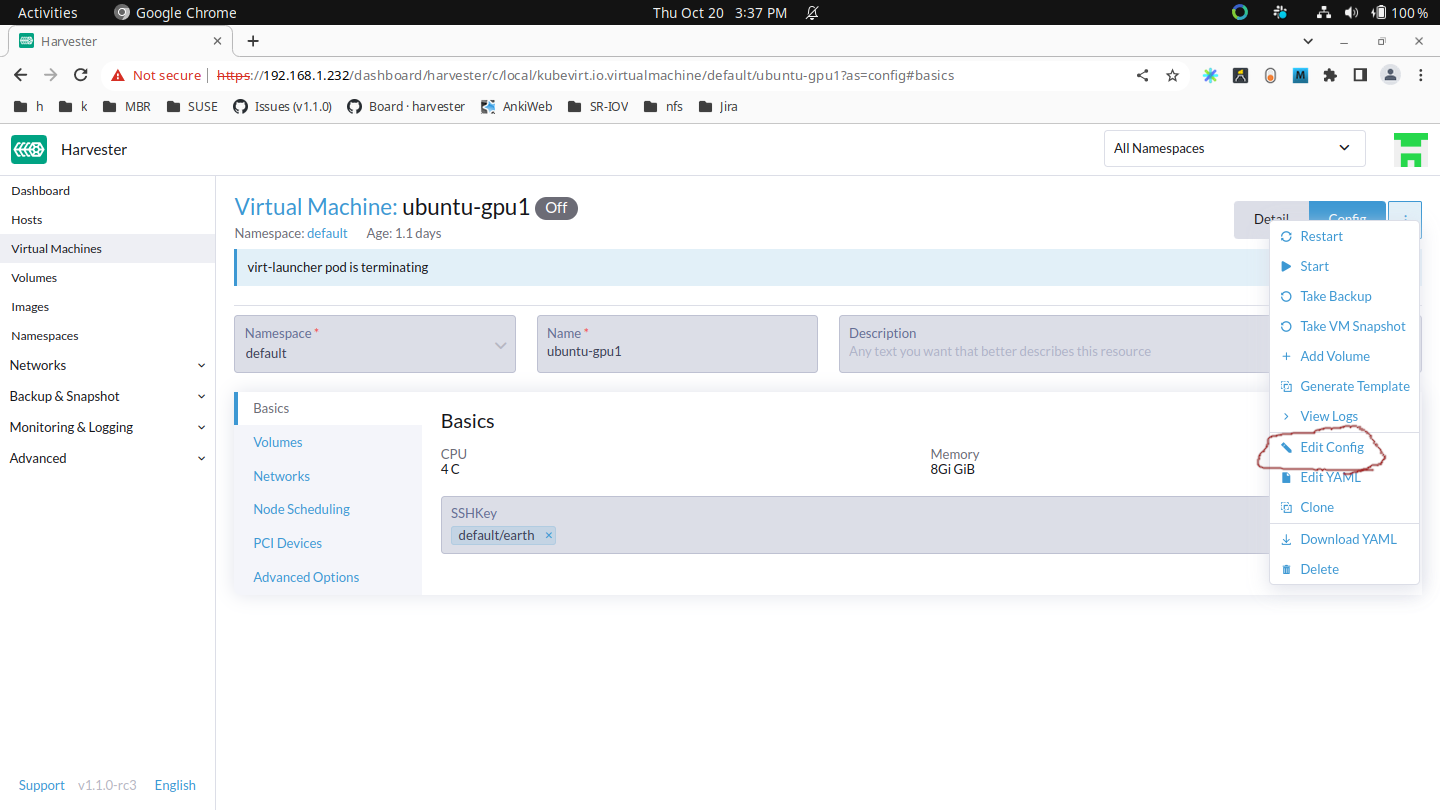

After enabling these PCI devices, you can navigate to the Virtual Machines page and select Edit Config to pass these devices.

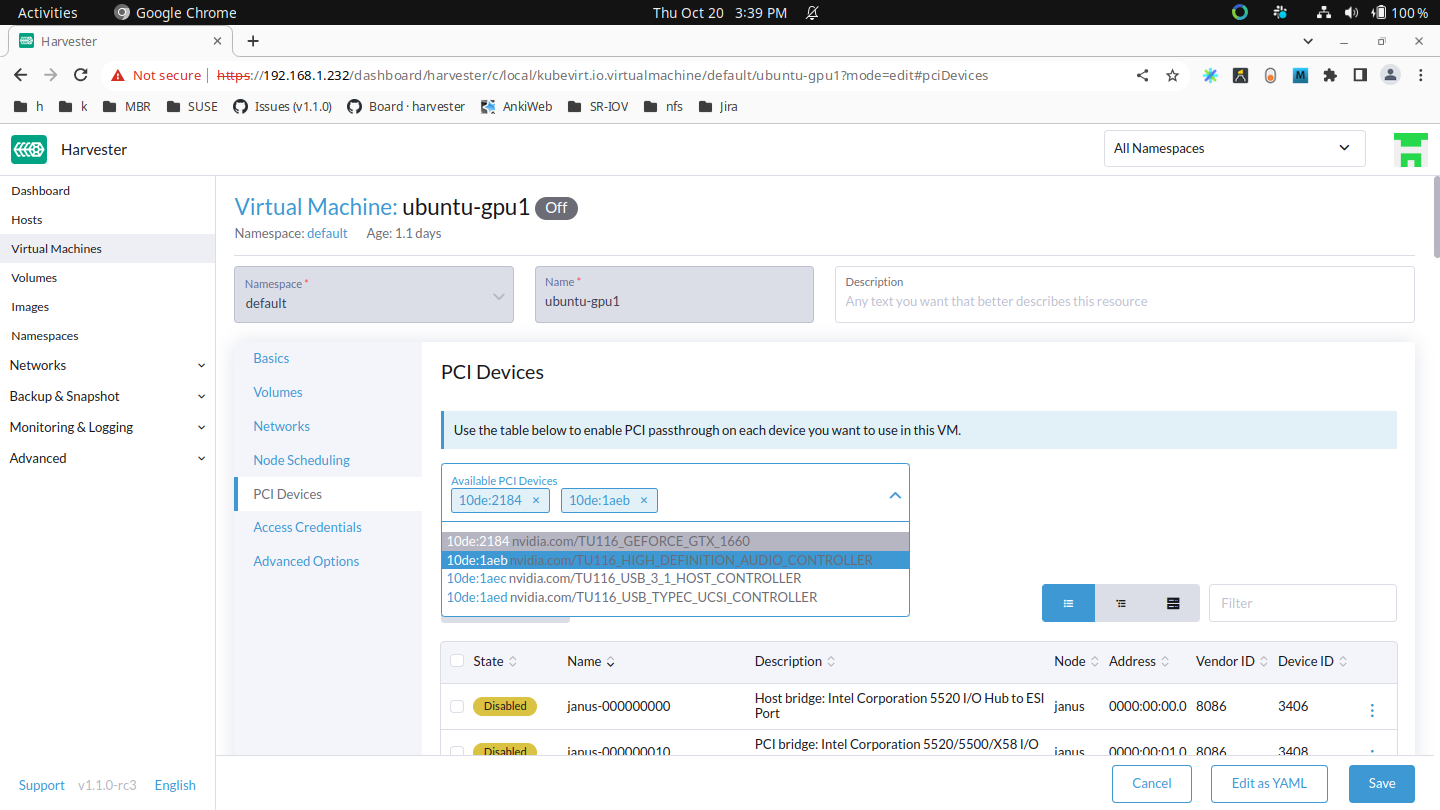

Select PCI Devices and use the Available PCI Devices drop-down. Select the devices you want to attach from the list displayed and then click Save.

Using a passed-through PCI Device inside the VM

Boot the VM and run lspci inside it. The attached PCI devices will show up, although the PCI address in the VM won't necessarily match the PCI address in the host.
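Since the guest address can differ from the host address, it is often easier to look for the device by name. The sketch below parses default `lspci` output and filters by a keyword. The sample text is illustrative only, not output captured from a real VM, and the helper names are hypothetical.

```python
import re
from typing import List, NamedTuple


class PciEntry(NamedTuple):
    address: str
    device_class: str
    description: str


# Matches default `lspci` lines such as
# "06:00.0 VGA compatible controller: NVIDIA Corporation Device 1eb8 (rev a1)".
# The domain prefix ("0000:") only appears with `lspci -D`, so it is optional.
_LINE = re.compile(
    r"^((?:[0-9a-fA-F]{4}:)?[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7])\s+([^:]+):\s+(.*)$"
)


def parse_lspci(output: str) -> List[PciEntry]:
    """Turn `lspci` text into a list of entries, skipping unparseable lines."""
    entries = []
    for line in output.splitlines():
        match = _LINE.match(line.strip())
        if match:
            entries.append(PciEntry(*match.groups()))
    return entries


def find_devices(output: str, keyword: str) -> List[PciEntry]:
    """Return entries whose description contains the keyword (case-insensitive)."""
    keyword = keyword.lower()
    return [e for e in parse_lspci(output) if keyword in e.description.lower()]


if __name__ == "__main__":
    # Illustrative sample; on a real VM, feed in the output of `lspci` instead.
    sample = (
        "00:00.0 Host bridge: Intel Corporation 440FX - 82441FX PMC [Natoma] (rev 02)\n"
        "06:00.0 VGA compatible controller: NVIDIA Corporation Device 1eb8 (rev a1)\n"
    )
    for entry in find_devices(sample, "nvidia"):
        print(entry.address, entry.description)
```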

Installing drivers for your PCI device inside the VM

This is just like installing drivers in the host. The PCI passthrough feature will bind the host device to the vfio-pci driver, which gives VMs the ability to use their own drivers.
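To confirm which kernel driver a device is bound to, one option is to read the `driver` symlink that Linux exposes under sysfs for each PCI device. The sketch below assumes the standard sysfs layout (`/sys/bus/pci/devices/<address>/driver`); the function name and the example address are hypothetical. Run on the host, a passed-through device would be expected to report `vfio-pci`; run inside the VM, it reports whichever guest driver has claimed the device.

```python
import os
from typing import Optional


def bound_driver(address: str, sysfs_root: str = "/sys") -> Optional[str]:
    """Return the name of the kernel driver bound to a PCI device.

    Returns None when the device does not exist or has no driver bound.
    `address` is the full PCI address including the domain, e.g. "0000:04:10.0".
    """
    link = os.path.join(sysfs_root, "bus", "pci", "devices", address, "driver")
    try:
        # The symlink target ends with the driver name, e.g. ".../drivers/vfio-pci".
        return os.path.basename(os.readlink(link))
    except OSError:
        return None


if __name__ == "__main__":
    # Hypothetical address; replace with the address of your device.
    print(bound_driver("0000:04:10.0"))
```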

Known Issues

- Issue #6648: A virtual machine can be scheduled on an incorrect node if the cluster has multiple instances of the same PCI device.

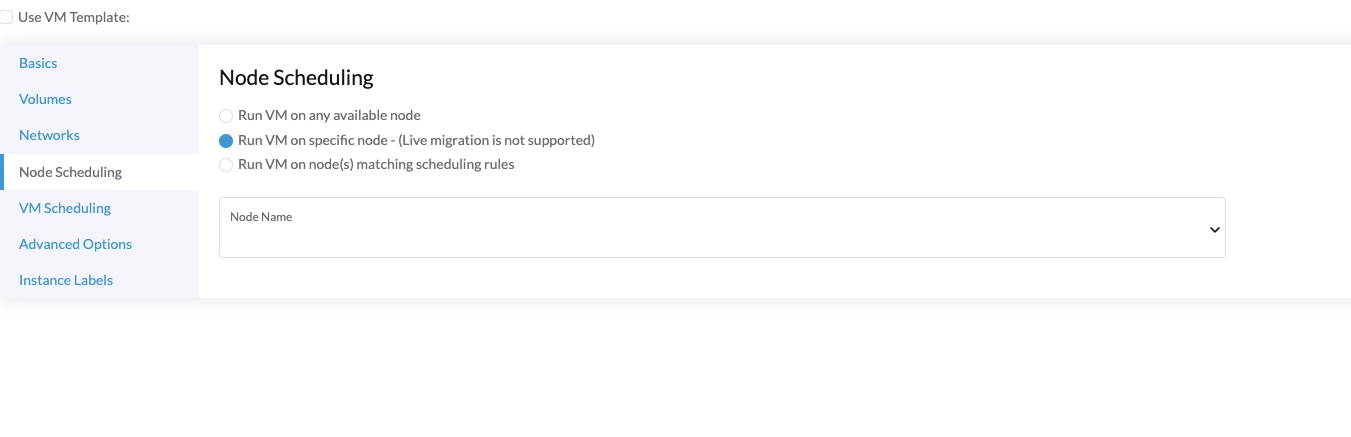

The pcidevices-controller add-on currently uses unique resource descriptors to publish devices to the kubelet. If multiple PCIDeviceClaims of the same device type exist within the cluster, the same unique resource descriptor is used for these PCIDeviceClaims, and so the virtual machine may be scheduled on an incorrect node. To ensure that the correct device and node are used, select Run VM on specific node when configuring Node Scheduling settings.
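The scheduling ambiguity can be illustrated with a small sketch that groups devices by the resource descriptor they are published under and reports any descriptor offered by more than one node. This is only a model of the problem, not how the add-on or the scheduler is implemented; the node names and resource names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def ambiguous_resources(devices: Iterable[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Find resource descriptors that are published by more than one node.

    `devices` is an iterable of (node_name, resource_name) pairs for devices
    with passthrough enabled. A VM requesting one of the returned resource
    names could be placed on any of the listed nodes.
    """
    nodes_by_resource: Dict[str, Set[str]] = defaultdict(set)
    for node, resource in devices:
        nodes_by_resource[resource].add(node)
    return {
        resource: sorted(nodes)
        for resource, nodes in nodes_by_resource.items()
        if len(nodes) > 1
    }


if __name__ == "__main__":
    # Hypothetical inventory: the same GPU model is claimed on two nodes.
    inventory = [
        ("node1", "example.com/GPU_MODEL_A"),
        ("node2", "example.com/GPU_MODEL_A"),
        ("node1", "example.com/NIC_MODEL_B"),
    ]
    print(ambiguous_resources(inventory))
```

Any resource name reported here is a case where pinning the VM with Run VM on specific node removes the ambiguity.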

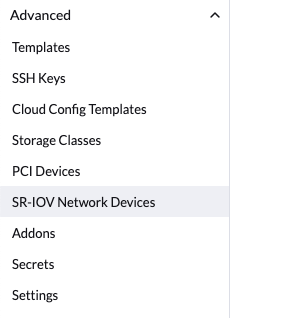

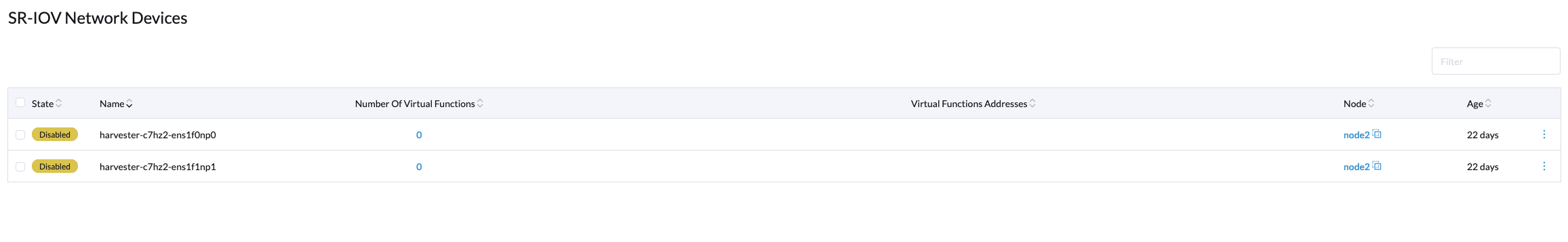

SRIOV Network Devices

Available as of v1.2.0

The pcidevices-controller addon can now scan network interfaces on the underlying hosts and check if they support SRIOV Virtual Functions (VFs). If a valid device is found, pcidevices-controller will generate a new SRIOVNetworkDevice object.
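For a manual check of whether an interface supports VFs, Linux exposes the `sriov_totalvfs` and `sriov_numvfs` attributes under sysfs for SR-IOV capable devices. The sketch below reads them for a given interface. It is an illustration of the kind of check involved, not the controller's actual implementation, and the function name and interface name are hypothetical.

```python
import os
from typing import Optional, Tuple


def sriov_capacity(interface: str, sysfs_root: str = "/sys") -> Optional[Tuple[int, int]]:
    """Return (current_vfs, max_vfs) for a network interface.

    Returns None when the interface does not expose SR-IOV attributes,
    which usually means it does not support Virtual Functions.
    """
    device_dir = os.path.join(sysfs_root, "class", "net", interface, "device")
    try:
        with open(os.path.join(device_dir, "sriov_totalvfs")) as f:
            total = int(f.read().strip())
        with open(os.path.join(device_dir, "sriov_numvfs")) as f:
            current = int(f.read().strip())
    except (OSError, ValueError):
        return None
    return current, total


if __name__ == "__main__":
    # Hypothetical interface name; replace with one from `ip link`.
    print(sriov_capacity("eth0"))
```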

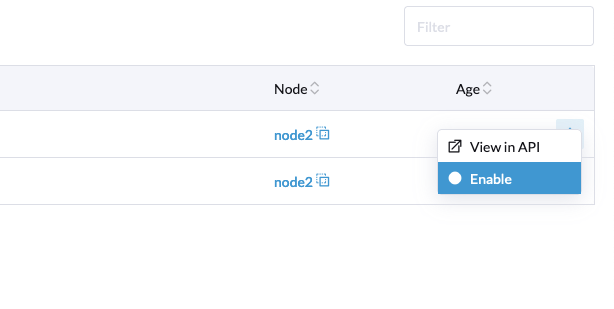

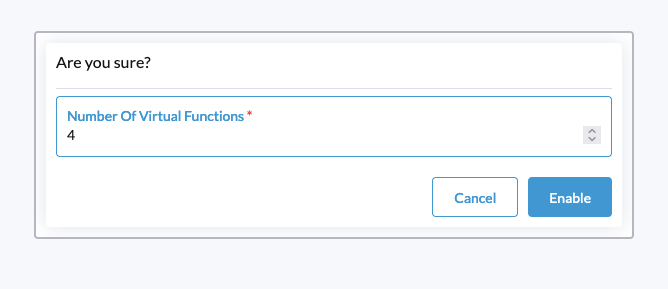

To create VFs on a SRIOVNetworkDevice, click ⋮ > Enable and then define the Number of Virtual Functions.

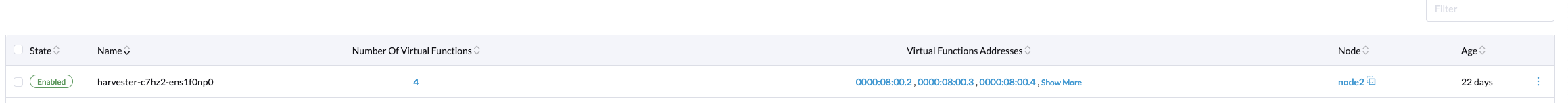

The pcidevices-controller will define the VFs on the network interface and report the new PCI device status for the newly created VFs.

On the next re-scan, the pcidevices-controller will create the PCIDevices for VFs. This can take up to 1 minute.

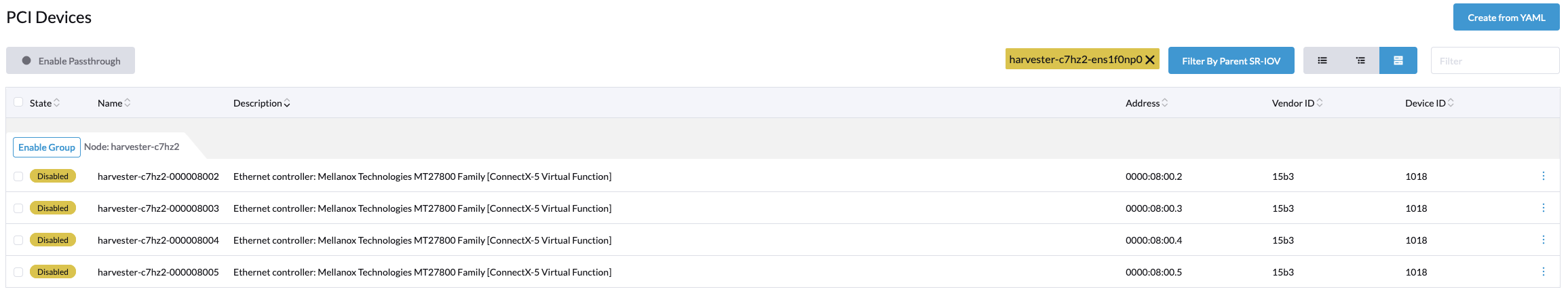

You can now navigate to the PCI Devices page to view the new devices.

We have also introduced a new filter to help you filter PCI devices by the underlying network interface.

The newly created PCI device can be passed through to virtual machines like any other PCI device.