Live Migration

Live migration moves a running virtual machine to a different host without downtime. Several processes and tasks run under the hood to complete a live migration.

Prerequisites

Live migration can occur when the following requirements are met:

- The cluster has at least one schedulable node (in addition to the current node) that matches all of the virtual machine's scheduling rules.

- The migration target node has enough available resources to host the virtual machine.

- The CPU, memory, volumes, devices and other resources requested by the virtual machine can be copied or rebuilt on the migration target node while the source virtual machine is still running.

Non-Migratable Virtual Machines

A virtual machine is considered non-migratable if it has one or more of the following:

- Volume with the following properties:

  - Type: CD-ROM or Container Disk

  - Access mode: ReadWriteOnce

  - StorageClass replica count: 1 (This is not detected in all cases.)

- Host device passthrough, such as PCI and vGPU

- Node selector that binds the virtual machine to a specific node

- Scheduling rules that match only one node

  The following are examples of rule conditions that are checked at runtime. For more information, see Automatically Applied Affinity Rules.

  - The virtual machine is attached to a cluster network that covers only one node.

  - CPU pinning is enabled on the virtual machine, and CPU Manager is only enabled on one node.

  - The virtual machine has strict anti-affinity rules that prevent it from being co-located with certain other virtual machines.

To live-migrate such a virtual machine, you must first remove the non-migratable volumes and devices, and add schedulable nodes.

Live-Migratable Virtual Machines

Virtual machines that do not have the properties of non-migratable virtual machines can be live-migrated.

How Migration Works

VirtualMachineInstanceMigration Object

When a virtual machine migration action is triggered, a VirtualMachineInstanceMigration object is created to track the state and progress of the operation. The Harvester controller correlates the VirtualMachineInstanceMigration object with the VirtualMachineInstance object by ensuring the instance object's identity is reflected in the migration object.

In the following example, the virtual machine named demo has an associated migration object. The UID of this object is added to the instance object's .status.migrationState.migrationUID property during migration.

$ kubectl get vmi demo -ojsonpath={.status.migrationState.migrationUID}

1d6d7273-275d-48e0-bb76-62e240b42aaf

The instance object's name is added to the migration object's .spec.vmiName property.

$ kubectl get vmim demo-6crrk -ojsonpath={.spec.vmiName}

demo

The format of the VirtualMachineInstanceMigration object's name varies depending on whether the migration is manually or automatically triggered.

When a migration is triggered from the Migrate menu item, the VirtualMachineInstanceMigration object's name is prefixed with the virtual machine's name and a random string (for example, vm1-a3d1f).

When a migration is triggered automatically, the VirtualMachineInstanceMigration object's name is prefixed with kubevirt-evacuation- and a random string (for example, kubevirt-evacuation-9c485).

The Harvester UI does not indicate whether a migration was triggered manually or automatically. You must check the name of the VirtualMachineInstanceMigration object to retrieve this information.
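The trigger source is encoded only in the object name, so a small shell helper can classify existing migration objects. The following is a minimal sketch that assumes the two naming patterns described above. The function name `migration_trigger` is hypothetical. A virtual machine whose own name starts with `kubevirt-evacuation-` would be misclassified.

```shell
#!/bin/sh
# Hypothetical helper: classify a VirtualMachineInstanceMigration name
# using the naming patterns described above.
migration_trigger() {
  case "$1" in
    kubevirt-evacuation-*) echo "automatic" ;;  # created by batch migration
    *) echo "manual" ;;                         # assumed to come from the Migrate menu item
  esac
}

# Example usage (requires cluster access):
# kubectl get vmim -A -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}' |
#   while read -r name; do echo "$name $(migration_trigger "$name")"; done
```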

CPU Model Matching

Each node has multiple CPU model labels, and each type of label uses a different key:

- Primary CPU model: host-model-cpu.node.kubevirt.io/{cpu-model}

- Supported CPU models: cpu-model.node.kubevirt.io/{cpu-model}

- Supported CPU models for migration: cpu-model-migration.node.kubevirt.io/{cpu-model}
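To see which CPU models a node advertises, you can filter its label keys by one of the prefixes listed above. This is a sketch only. The helper name `cpu_models_with_prefix` is hypothetical, and the usage line assumes `kubectl` access and a node named `node1`.

```shell
#!/bin/sh
# Hypothetical helper: read node label keys (one per line) on stdin and print
# the CPU model names that carry the given label key prefix.
cpu_models_with_prefix() {
  # Exact prefix match (no regex), then strip "<prefix>/" from each key.
  awk -v p="$1/" 'index($0, p) == 1 { print substr($0, length(p) + 1) }'
}

# Example usage (requires cluster access; "node1" is an assumed node name):
# kubectl get node node1 -o go-template='{{range $k, $v := .metadata.labels}}{{$k}}{{"\n"}}{{end}}' |
#   cpu_models_with_prefix host-model-cpu.node.kubevirt.io
```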

During live migration, the controller checks the value of spec.domain.cpu.model in the VirtualMachineInstance (VMI) CR, which is derived from spec.template.spec.domain.cpu.model in the VirtualMachine (VM) CR. If the value of spec.template.spec.domain.cpu.model is not set, the controller uses the default value host-model.

When host-model is used, the process fetches the value of the primary CPU model and adds the label cpu-model-migration.node.kubevirt.io/{cpu-model} to spec.nodeSelector of the newly created target pod.

Alternatively, you can customize the CPU model in spec.domain.cpu.model. For example, if the CPU model is XYZ, the process adds the label cpu-model.node.kubevirt.io/XYZ to spec.nodeSelector of the newly created target pod.

Note that host-model only allows migration of the VM to a node with the same CPU model. For more information, see Limitations.
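The selection rule described above can be summarized as a small function. This is a hypothetical sketch of the documented behavior, not KubeVirt's implementation. It assumes that the primary CPU model is read from the node the VM currently runs on, and the function name `target_node_selector` is illustrative only.

```shell
#!/bin/sh
# Hypothetical sketch of the node selector label key chosen for the target pod.
# $1: value of spec.domain.cpu.model (empty means "not set")
# $2: primary CPU model of the source node (assumption), taken from its
#     host-model-cpu.node.kubevirt.io/<model> label
target_node_selector() {
  model="${1:-host-model}"  # an unset model defaults to host-model
  if [ "$model" = "host-model" ]; then
    echo "cpu-model-migration.node.kubevirt.io/$2"
  else
    echo "cpu-model.node.kubevirt.io/$model"
  fi
}
```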

Starting a Migration

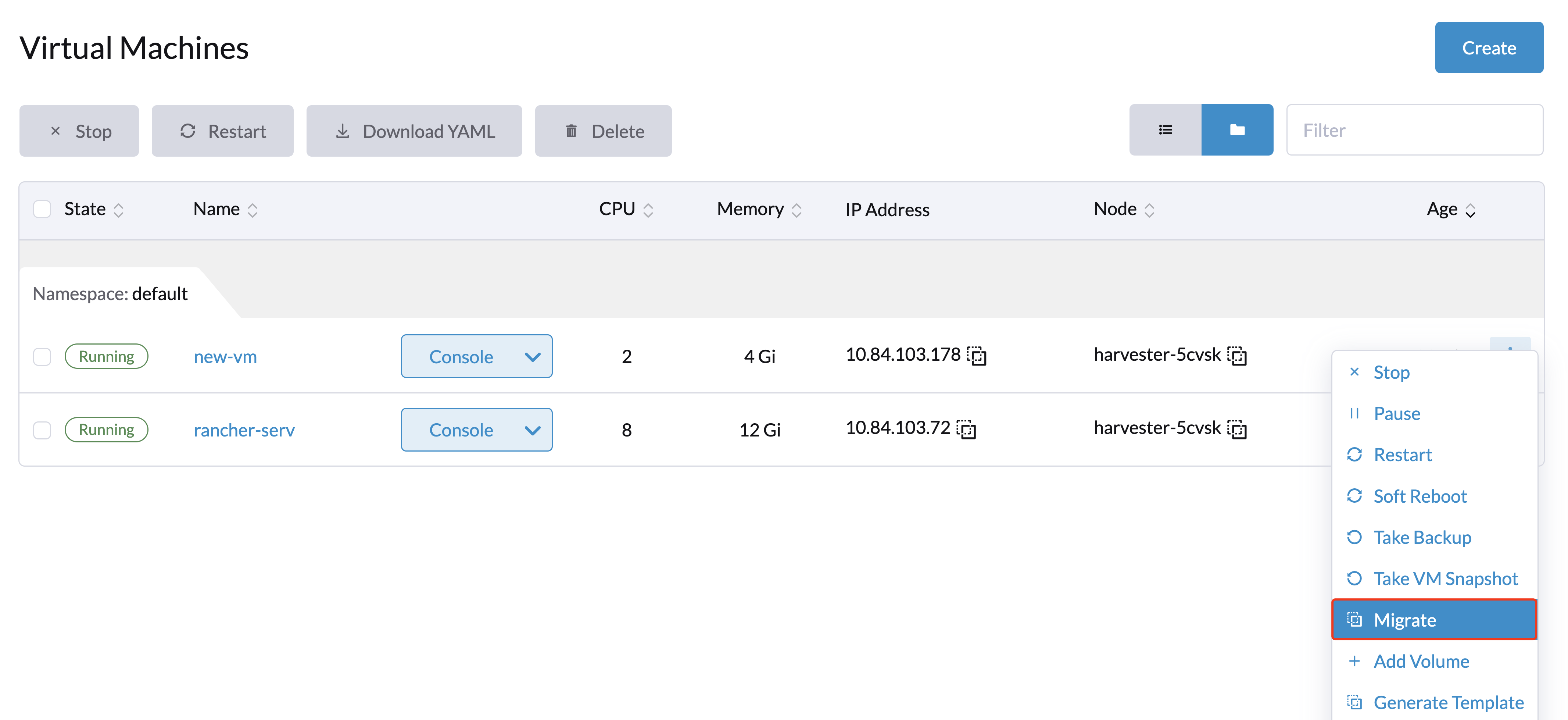

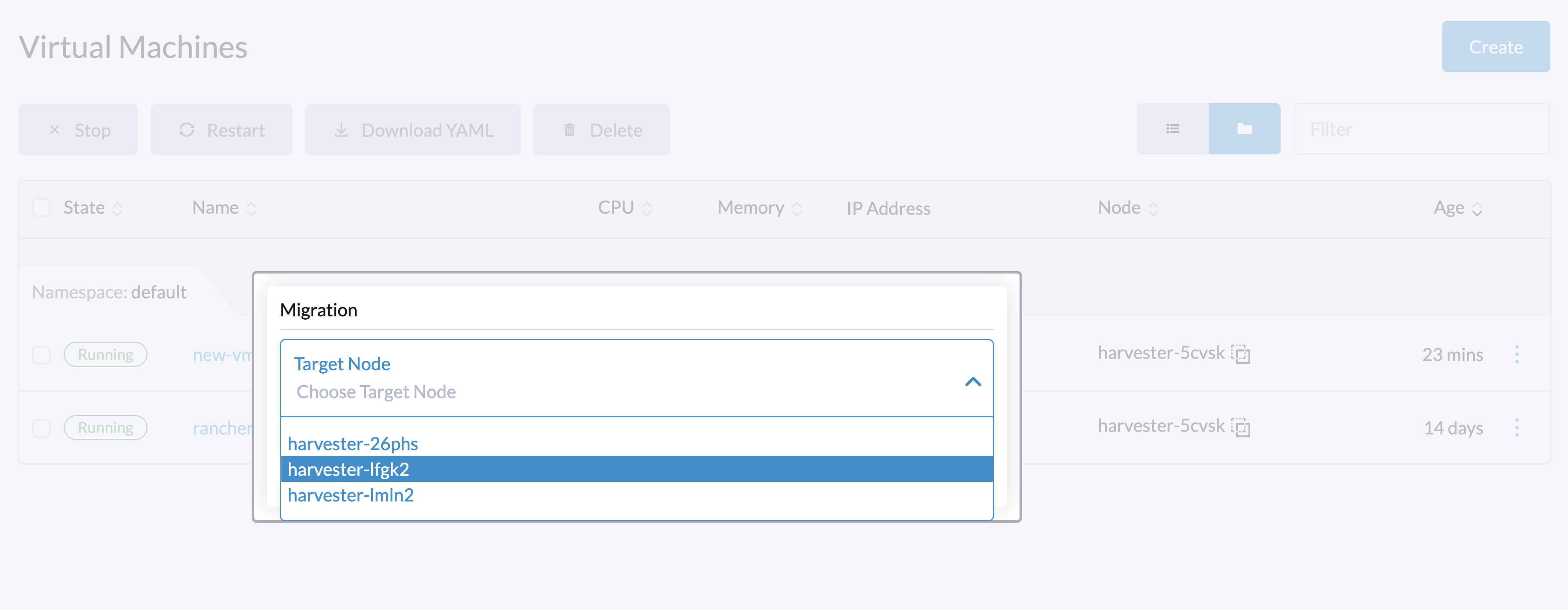

- Go to the Virtual Machines page.

- Find the virtual machine that you want to migrate and select ⋮ > Migrate.

- Choose the node to which you want to migrate the virtual machine. Click Apply.

The Migrate menu option is not available in the following situations:

- The cluster has only one node.

- The virtual machine is non-migratable.

- The virtual machine already has a running or pending migration process.
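Outside the UI, a migration can be requested by creating a VirtualMachineInstanceMigration object directly, which is the generic KubeVirt mechanism. The sketch below prints a minimal manifest and has several limitations. The helper name `vmim_manifest` is hypothetical, and the manifest does not select a target node as the UI does. It is also an assumption that Harvester handles such objects exactly like migrations started from the Migrate menu item. With `generateName`, the resulting object name follows the "virtual machine name plus random string" pattern described earlier.

```shell
#!/bin/sh
# Hypothetical helper: print a minimal VirtualMachineInstanceMigration manifest.
# $1: name of the running VirtualMachineInstance, $2: its namespace
vmim_manifest() {
  cat <<EOF
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstanceMigration
metadata:
  generateName: $1-
  namespace: $2
spec:
  vmiName: $1
EOF
}

# Example usage (requires cluster access). "kubectl create" is used because
# "kubectl apply" does not support metadata.generateName.
# vmim_manifest demo default | kubectl create -f -
```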

Aborting a Migration

- Go to the Virtual Machines page.

- Find the virtual machine with the Migrating status that you want to abort, and then select ⋮ > Abort Migration.

The Abort Migration menu item is available when the virtual machine already has a running or pending migration process.

Do not use this UI feature if the migration process was created using batch migration. For more information, see VirtualMachineInstanceMigration Object.

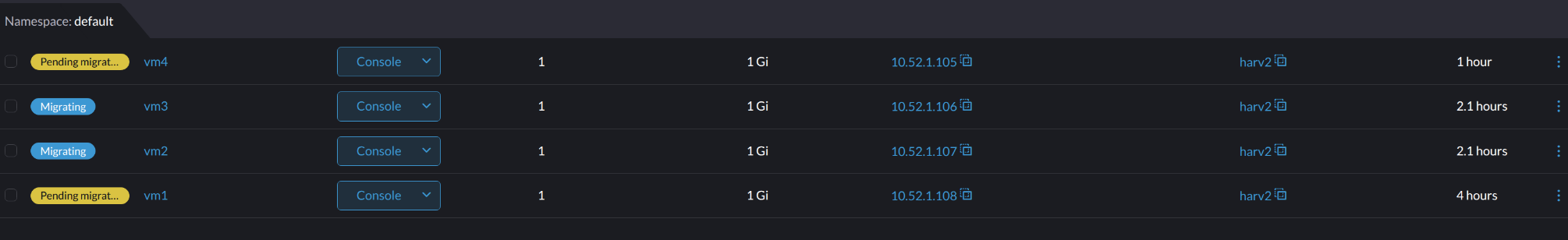

Automatically Triggered Batch Migration

Harvester upgrades and node maintenance both benefit from live migration. The underlying process, which is called batch migration, is slightly different from the one described in Starting a Migration. This process involves the following steps:

- The controller watches a dedicated taint on the node object.

- The controller creates a VirtualMachineInstanceMigration object for each live-migratable virtual machine on the current node.

- The migrations are queued, scheduled internally, and processed in batches. The Harvester UI shows the statuses Pending migration and Migrating to indicate progress.

- The controller monitors the processing and waits until all migrations are completed or have timed out.
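The final "wait until all are completed" step can be approximated from the command line by checking the phase of every migration object. This is a hypothetical sketch, not the Harvester controller's code. The function name `all_migrations_finished` is illustrative. The sketch also assumes that `Succeeded` and `Failed` are the only final phases, and that timed-out migrations end up as `Failed`.

```shell
#!/bin/sh
# Hypothetical sketch: read VirtualMachineInstanceMigration phases (one per
# line) on stdin. Succeed only if every migration reached a final phase.
all_migrations_finished() {
  while read -r phase; do
    case "$phase" in
      Succeeded|Failed) ;;  # final phase (assumption: timeouts end as Failed)
      *) return 1 ;;        # pending, running, or no phase reported yet
    esac
  done
  return 0
}

# Example usage (requires cluster access):
# kubectl get vmim -A -o jsonpath='{range .items[*]}{.status.phase}{"\n"}{end}' |
#   all_migrations_finished && echo "all migrations finished"
```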

Migration Timeouts

Completion Timeout

The live migration process copies virtual machine memory pages and disk blocks to the destination. In some cases, the virtual machine writes to memory pages or disk blocks faster than they can be copied, which prevents the migration from completing in a reasonable amount of time.

Live migration is aborted if it exceeds the completion timeout of 800 seconds per GiB of data. For example, the migration of a virtual machine with 8 GiB of memory times out after 6400 seconds (8 × 800).
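The completion timeout scales linearly with the amount of data. The small helper below shows the arithmetic. It is a sketch that only handles whole GiB values because shell arithmetic is integer-only, and the function name `completion_timeout_seconds` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: completion timeout = 800 seconds per GiB of data.
completion_timeout_seconds() {
  gib="$1"  # amount of data in whole GiB
  echo $((800 * gib))
}

# completion_timeout_seconds 8   # prints 6400
```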

Progress Timeout

Live migration is also aborted if copying memory makes no progress for 150 seconds.
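Unlike the completion timeout, the progress timeout measures the time since the last observed progress, not the total elapsed time. The check below illustrates this under stated assumptions. The function name is hypothetical, timestamps are in seconds, and the boundary (whether exactly 150 seconds counts as a timeout) is assumed to be inclusive.

```shell
#!/bin/sh
# Hypothetical check mirroring the progress timeout described above.
PROGRESS_TIMEOUT_SECONDS=150

# $1: time of the last observed copy progress (seconds since epoch)
# $2: current time (seconds since epoch)
progress_timed_out() {
  # Assumption: a stall of exactly 150 seconds already triggers the timeout.
  [ $(($2 - $1)) -ge "$PROGRESS_TIMEOUT_SECONDS" ]
}
```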

Monitoring

For information about viewing real-time metrics, see Live Migration Status and Metrics.

Limitations

CPU Models

host-model only allows migration of the VM to a node with the same CPU model. Specifying a CPU model is not always required. However, if no CPU model is specified and no other node has the same CPU model, you must shut down the VM, assign a CPU model that is supported by all nodes, and then restart the VM.

Example:

- Node A:

  - host-model-cpu.node.kubevirt.io/XYZ

  - cpu-model-migration.node.kubevirt.io/XYZ

  - cpu-model.node.kubevirt.io/123

- Node B:

  - host-model-cpu.node.kubevirt.io/ABC

  - cpu-model-migration.node.kubevirt.io/ABC

  - cpu-model.node.kubevirt.io/123

Migrating a VM with host-model is not possible because the values of host-model-cpu.node.kubevirt.io are not identical. However, both nodes support the 123 CPU model, so you can migrate any VM that uses the 123 CPU model. To assign this CPU model, use either of the following methods:

- Cluster level: Run kubectl edit kubevirts.kubevirt.io -n harvester-system and add spec.configuration.cpuModel: "123". This change also affects newly created VMs.

- Individual VMs: Modify the VM configuration to include spec.template.spec.domain.cpu.model: "123".

Both methods require you to restart the VMs. If you are certain that all nodes in the cluster support a specific CPU model, you can define this at the cluster level before creating any VMs. In doing so, you eliminate the need to restart the VMs (to assign the CPU model) during live migration.
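Two shell helpers illustrate the procedure: the first finds a CPU model that every node supports, and the second builds merge patches for the two methods instead of using an interactive editor. This is a sketch. All function names are hypothetical. The KubeVirt CR name `kubevirt` and the VM name and namespace in the usage comments are assumptions about your cluster. The VMs must still be restarted afterwards, as described above.

```shell
#!/bin/sh
# Hypothetical helper: print the CPU models (cpu-model.node.kubevirt.io/<model>)
# that are supported by every node. Each argument holds one node's label keys,
# separated by whitespace.
common_cpu_models() {
  node_count=$#
  for labels in "$@"; do
    # $labels is intentionally unquoted so that it splits into one key per line.
    printf '%s\n' $labels |
      awk -v p="cpu-model.node.kubevirt.io/" 'index($0, p) == 1 { print substr($0, length(p) + 1) }' |
      sort -u  # count each model at most once per node
  done | sort | uniq -c | awk -v n="$node_count" '$1 == n { print $2 }'
}

# Hypothetical helpers that build merge patches for the two methods above.
cluster_cpu_model_patch() {
  printf '{"spec":{"configuration":{"cpuModel":"%s"}}}\n' "$1"
}
vm_cpu_model_patch() {
  printf '{"spec":{"template":{"spec":{"domain":{"cpu":{"model":"%s"}}}}}}\n' "$1"
}

# Example usage (requires cluster access; names are assumptions):
# kubectl patch kubevirts.kubevirt.io kubevirt -n harvester-system --type merge -p "$(cluster_cpu_model_patch 123)"
# kubectl patch vm demo -n default --type merge -p "$(vm_cpu_model_patch 123)"
```

With the labels of node A and node B from the example, `common_cpu_models` prints only `123`, because XYZ and ABC appear under different keys and on one node each.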

Network Outages

Live migration is highly sensitive to network outages. Any interruption to the network connection between the source and target nodes during migration can have a variety of outcomes.

mgmt Network Outages

Live migration via mgmt (the built-in cluster network) relies on the availability of the management interfaces on the source and target nodes. mgmt network outages are considered critical because they not only disrupt the migration process but also affect overall node management.

VM Migration Network Outages

A VM migration network isolates migration traffic from other network activities. While this setup improves migration performance and reliability, especially in environments with high network traffic, it also makes the migration process dependent on the availability of that specific network.

An outage on the VM migration network can affect the migration process in the following ways:

- Brief interruption: The migration process abruptly stops. Once connectivity is restored, the process resumes and can be completed successfully, albeit with a delay.

- Extended outage: The migration operation times out and fails. The source virtual machine continues to run normally on the source node.

The migration process runs in peer-to-peer mode, which means that the libvirt daemon (libvirtd) on the source node controls the migration by calling the destination daemon directly. In addition, a built-in keepalive mechanism checks that this connection is still alive during the migration process. If the connection remains unresponsive for a specific period, it is closed, and the migration process is aborted.

By default, the keepalive interval is set to 5 seconds, and the retry count is set to 5. Given these default values, the migration process is aborted if the connection is inactive for approximately 30 seconds: one interval before the first keepalive message plus five unanswered retries, or 5 × (5 + 1) seconds. However, the migration may fail earlier or later, depending on the actual cluster conditions.
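This sketch estimates the inactivity window for non-default keepalive settings, using libvirt's description of keepalive behavior (the connection closes roughly interval × (count + 1) seconds after the last message). The function name is hypothetical, and real failures can occur earlier or later, as noted above.

```shell
#!/bin/sh
# Hypothetical helper: approximate seconds of inactivity before libvirt
# closes the connection, computed as interval * (count + 1).
keepalive_close_after() {
  interval="$1"  # seconds between keepalive messages
  count="$2"     # unanswered keepalive messages tolerated
  echo $((interval * (count + 1)))
}

# keepalive_close_after 5 5   # prints 30 (the default values)
```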