Upgrading Harvester

Upgrade support matrix

The following table shows the upgrade path of all supported versions.

| Upgrade from version | Supported new version(s) |
|---|---|
| v1.4.1/v1.4.2 | v1.4.3 |
| v1.4.1 | v1.4.2 |
| v1.4.0 | v1.4.1 |
| v1.3.2 | v1.4.0 |
| v1.3.1 | v1.3.2 |
| v1.2.2/v1.3.0 | v1.3.1 |
| v1.2.1 | v1.2.2 |
| v1.1.2/v1.1.3/v1.2.0 | v1.2.1 |
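The table above can also be expressed as a small lookup, which may be handy in automation that needs to plan a multi-hop upgrade. This is only a sketch transcribed from the table; `supported_targets` is a hypothetical helper name, not something Harvester ships.

```bash
# Hypothetical helper: print the supported target version(s) for the
# installed version given as $1, transcribed from the support matrix.
supported_targets() {
  case "$1" in
    v1.4.2)               echo "v1.4.3" ;;
    v1.4.1)               echo "v1.4.2 v1.4.3" ;;
    v1.4.0)               echo "v1.4.1" ;;
    v1.3.2)               echo "v1.4.0" ;;
    v1.3.1)               echo "v1.3.2" ;;
    v1.2.2|v1.3.0)        echo "v1.3.1" ;;
    v1.2.1)               echo "v1.2.2" ;;
    v1.1.2|v1.1.3|v1.2.0) echo "v1.2.1" ;;
    *)
      echo "no supported upgrade path listed for $1" >&2
      return 1
      ;;
  esac
}

# Usage:
#   supported_targets v1.3.2
```

A version that is not in the table makes the function return a non-zero status, so a calling script can stop instead of guessing a path.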

Rancher upgrade

If you are using Rancher to manage your Harvester cluster, we recommend upgrading your Rancher server first. For more information, please refer to the Rancher upgrade guide.

For the Harvester & Rancher support matrix, see the support matrix page on our website.

- Upgrading Rancher will not automatically upgrade your Harvester cluster. You still need to upgrade your Harvester cluster after upgrading Rancher.

- Upgrading Rancher will not bring your Harvester cluster down. You can still access your Harvester cluster using its virtual IP.

Before starting an upgrade

Review the `upgrade-config` setting, which lets you adjust the upgrade strategies and behaviors to suit your cluster environment.

Start an upgrade

Before you upgrade your Harvester cluster, we highly recommend the following:

- Back up your VMs if needed.

- Do not operate the cluster during an upgrade. For example, do not create new VMs or upload new images.

- Make sure your hardware meets the preferred hardware requirements, because an upgrade consumes additional intermediate resources.

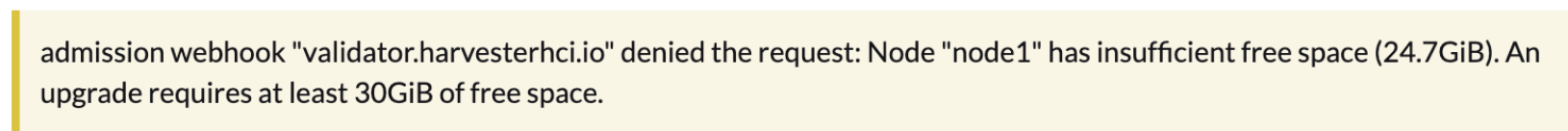

- Make sure each node has at least 30 GiB of free system partition space (`df -h /usr/local/`). If any node in the cluster has less than 30 GiB of free system partition space, the upgrade will be denied. Check the Free system partition space requirement section for more information.

- Run the pre-check script on a Harvester control-plane node. Pick the script that matches your cluster's version: https://github.com/harvester/upgrade-helpers/tree/main/pre-check.

- A number of one-off privileged pods will be created in the `harvester-system` and `cattle-system` namespaces to perform host-level upgrade operations. If pod security admission is enabled, adjust these policies to allow these pods to run.

- Make sure all nodes' times are in sync. Using an NTP server to synchronize time is recommended. If an NTP server was not configured during the installation, you can manually add an NTP server on each node:

      $ sudo -i

      # Add time servers (systemd-timesyncd reads them from the [Time] section)
      $ vim /etc/systemd/timesyncd.conf
      [Time]
      NTP=0.pool.ntp.org

      # Enable and start the systemd-timesyncd
      $ timedatectl set-ntp true

      # Check status
      $ sudo timedatectl status

- NICs that connect to a PCI bridge might be renamed after an upgrade. Please check the knowledge base article for further information.
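The time-sync recommendation in the list above can be spot-checked on each node. The following is a minimal sketch, assuming that `timedatectl status` prints a `System clock synchronized:` line (the wording used by recent systemd releases). `clock_is_synced` is a hypothetical helper name.

```bash
# Hypothetical helper: read `timedatectl status` output on stdin and
# succeed only if it reports the system clock as synchronized.
clock_is_synced() {
  grep -Eq '^[[:space:]]*System clock synchronized:[[:space:]]*yes[[:space:]]*$'
}

# Usage on a node:
#   timedatectl status | clock_is_synced && echo "in sync" || echo "NOT in sync"
```

Reading from stdin keeps the check independent of how the status text is collected, for example over SSH from each node.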

-

Make sure to read the Warning paragraph at the top of this document first.

-

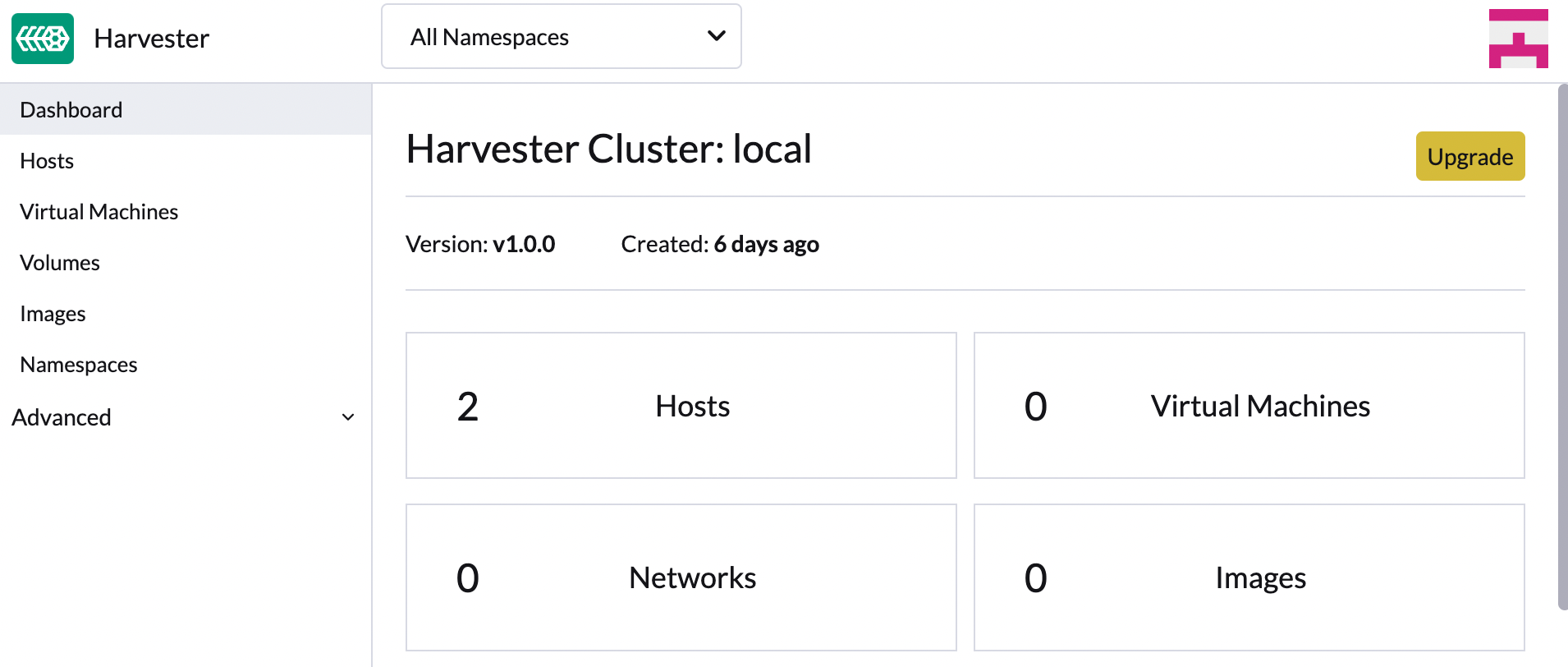

Harvester checks if there are new upgradable versions periodically. If there are new versions, an upgrade button shows up on the Dashboard page.

- If the cluster is in an air-gapped environment, please see Prepare an air-gapped upgrade section first. You can also speed up the ISO download by using the approach in that section.

-

Navigate to Harvester GUI and click the upgrade button on the Dashboard page.

-

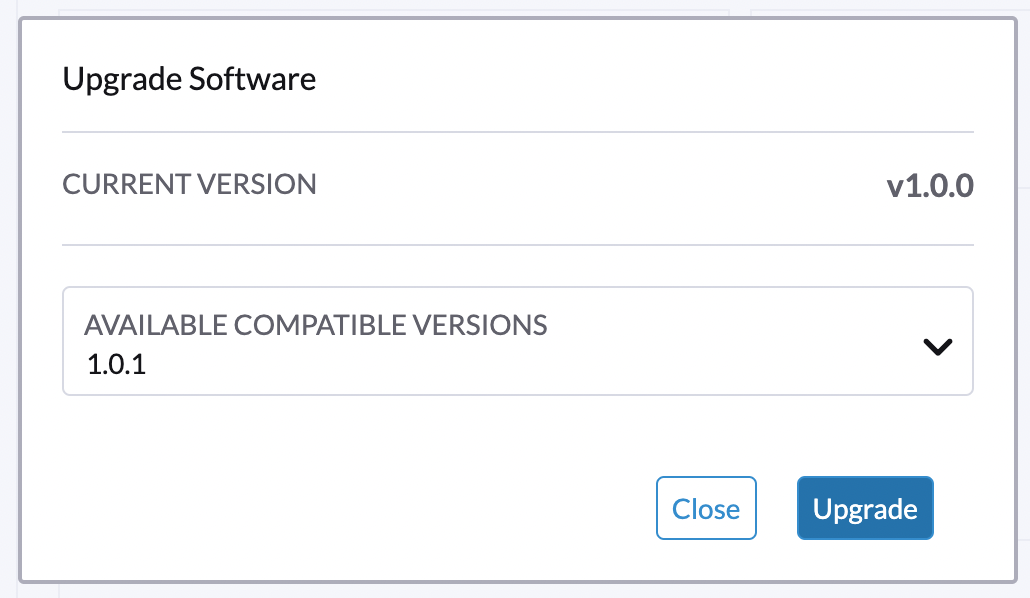

Select a version to start upgrading.

-

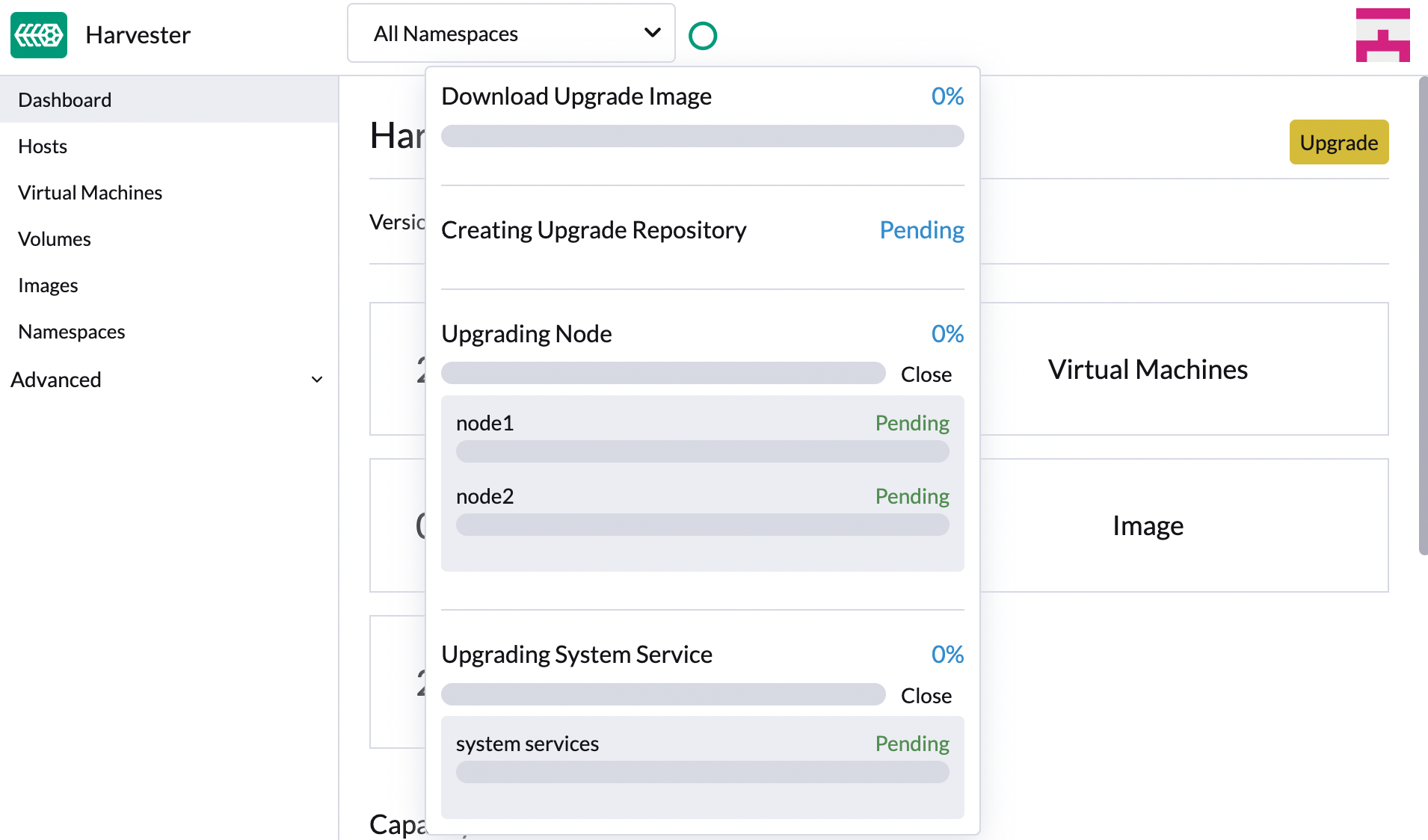

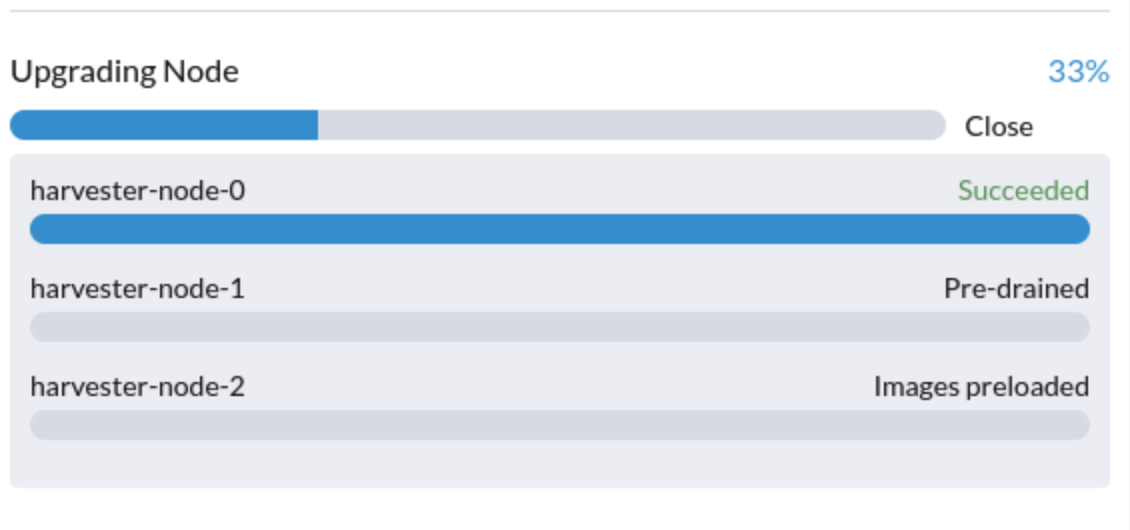

Click the circle on the top to display the upgrade progress.

Prepare an air-gapped upgrade

Check the Upgrade support matrix section first to confirm which versions you can upgrade to.

- Download a Harvester ISO file from the release pages.

- Save the ISO to a local HTTP server. Assume the file is hosted at `http://10.10.0.1/harvester.iso`.

- Download the version file from the release pages, for example, `https://releases.rancher.com/harvester/{version}/version.yaml`.

  - Replace the `isoURL` value in the `version.yaml` file:

        apiVersion: harvesterhci.io/v1beta1
        kind: Version
        metadata:
          name: v1.0.2
          namespace: harvester-system
        spec:
          isoChecksum: <SHA-512 checksum of the ISO>
          isoURL: http://10.10.0.1/harvester.iso  # change to local ISO URL
          releaseDate: '20220512'

  - Assume the file is hosted at `http://10.10.0.1/version.yaml`.

- Log in to one of your control plane nodes.

- Become root and create a version:

      rancher@node1:~> sudo -i
      node1:~ # kubectl create -f http://10.10.0.1/version.yaml

- An upgrade button should show up on the Harvester GUI Dashboard page.
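The manual edits to `version.yaml` in the steps above can be scripted. The sketch below assumes GNU coreutils (`sha512sum`) is available on the machine that hosts the files. `iso_sha512` and `set_iso_url` are hypothetical helper names, and `version.local.yaml` is an illustrative output file name.

```bash
# Hypothetical helper: print only the SHA-512 hex digest of the file $1,
# suitable for comparing against the isoChecksum field.
iso_sha512() {
  sha512sum "$1" | awk '{ print $1 }'
}

# Hypothetical helper: read a version.yaml on stdin and rewrite the value
# of isoURL to $1, keeping the indentation and all other lines unchanged.
# Assumes the URL contains no "|" or "&" characters; any trailing comment
# on the isoURL line is dropped.
set_iso_url() {
  sed -E "s|^([[:space:]]*isoURL:).*|\1 $1|"
}

# Usage:
#   iso_sha512 harvester.iso
#   set_iso_url http://10.10.0.1/harvester.iso < version.yaml > version.local.yaml
```

Comparing the printed digest with the `isoChecksum` value before hosting the ISO helps catch a corrupted or mismatched download early.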

Free system partition space requirement

Available as of v1.2.0

The minimum free system partition space requirement in Harvester v1.2.0 is 30 GiB, which will be revised in each release.
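To see how a node compares against this minimum before starting an upgrade, the free space can be read from `df`. This is a rough sketch and not the check that Harvester itself performs; `has_min_free_space` and `avail_kib_for` are hypothetical helper names, and `/usr/local/` is the path used elsewhere in this document.

```bash
# Hypothetical helper: succeed if $1 (available space in KiB) is at
# least $2 GiB.
has_min_free_space() {
  avail_kib=$1
  min_gib=$2
  [ "$avail_kib" -ge $((min_gib * 1024 * 1024)) ]
}

# Hypothetical helper: print the available KiB on the filesystem that
# holds path $1, taken from the fourth column of POSIX-format df output.
avail_kib_for() {
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Usage on a node (30 GiB is the minimum stated above):
#   has_min_free_space "$(avail_kib_for /usr/local/)" 30 \
#     && echo "enough free space" || echo "below the minimum"
```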

Harvester checks the amount of free system partition space on each node when you select Upgrade. If any node does not meet the requirement, the upgrade is denied.

If some nodes do not have enough free system partition space but you still want to try upgrading, you can customize the requirement by updating the `harvesterhci.io/minFreeDiskSpaceGB` annotation of the `Version` object.

    apiVersion: harvesterhci.io/v1beta1
    kind: Version
    metadata:
      annotations:
        harvesterhci.io/minFreeDiskSpaceGB: "30" # the value is pre-defined and may be customized
      name: 1.2.0
      namespace: harvester-system
    spec:
      isoChecksum: <SHA-512 checksum of the ISO>
      isoURL: http://192.168.0.181:8000/harvester-master-amd64.iso
      minUpgradableVersion: 1.1.2
      releaseDate: "20230609"

Setting a smaller value than the pre-defined value may cause the upgrade to fail and is not recommended in a production environment.

The following sections describe solutions for issues related to this requirement.

Free System Partition Space Manually

Harvester attempts to remove unnecessary container images after an upgrade is completed. However, this automatic image cleanup may not be performed for various reasons. You can use this script to manually remove images. For more information, see issue #6620.

Set Up a Private Container Registry and Skip Image Preloading

The system partition might still lack free space even after you remove images. To address this, set up a private container registry for both current and new images, and configure the `upgrade-config` setting with the following value:

    {"imagePreloadOption":{"strategy":{"type":"skip"}}, "restoreVM": false}

Harvester skips the upgrade image preloading process. When the deployments on the nodes are upgraded, the container runtime loads the images stored in the private container registry.

Do not rely on the public container registry. Consider any potential internet service interruptions and how close you are to reaching your Docker Hub rate limit. Failure to download any of the required images may cause the upgrade to fail and may leave the cluster in an intermediate state.

Longhorn Manager Crashes Due to Backing Image Eviction

When upgrading to Harvester v1.4.x, Longhorn Manager may crash if the EvictionRequested flag is set to true on any node or disk. This issue is caused by a race condition between the deletion of a disk in the backing image spec and the updating of its status.

To prevent the issue from occurring, ensure that the EvictionRequested flag is set to false before you start the upgrade process.
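One way to look for the flag before upgrading is to list the Longhorn node objects and filter on it. The sketch below covers only the node-level flag and assumes it is exposed as `.spec.evictionRequested` on `nodes.longhorn.io`; disk-level flags (assumed to sit under `.spec.disks`) still need to be inspected separately. `eviction_requested` is a hypothetical helper name.

```bash
# Hypothetical helper: read "<name><TAB><flag>" lines on stdin and print
# the names whose flag is exactly "true".
eviction_requested() {
  awk -F '\t' '$2 == "true" { print $1 }'
}

# Usage (node-level flag only; the field path is an assumption):
#   kubectl -n longhorn-system get nodes.longhorn.io \
#     -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.evictionRequested}{"\n"}{end}' \
#     | eviction_requested
```

Empty output means that no node-level flag is set. Any name that is printed identifies a node to fix before starting the upgrade.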

CVE-2025-1974: Re-enable RKE2 ingress-nginx Admission Webhooks

If you have previously disabled the RKE2 ingress-nginx admission webhook due to CVE-2025-1974, you will need to re-enable it after upgrading to Harvester v1.4.3 or later with the following steps:

- Confirm that Harvester is using nginx-ingress v1.12.1 or later.

      $ kubectl -n kube-system get po -l"app.kubernetes.io/name=rke2-ingress-nginx" -ojsonpath='{.items[].spec.containers[].image}'
      rancher/nginx-ingress-controller:v1.12.1-hardened1

- Use `kubectl -n kube-system edit helmchartconfig rke2-ingress-nginx` to remove the following configurations from the resource.

  - `.spec.valuesContent.controller.admissionWebhooks.enabled: false`
  - `.spec.valuesContent.controller.extraArgs.enable-annotation-validation: true`

- The following is an example of what the new `.spec.valuesContent` configuration should look like.

      apiVersion: helm.cattle.io/v1
      kind: HelmChartConfig
      metadata:
        name: rke2-ingress-nginx
        namespace: kube-system
      spec:
        valuesContent: |-
          controller:
            admissionWebhooks:
              port: 8444
            extraArgs:
              default-ssl-certificate: cattle-system/tls-rancher-internal
            config:
              proxy-body-size: "0"
              proxy-request-buffering: "off"
            publishService:
              pathOverride: kube-system/ingress-expose

If the HelmChartConfig resource contains other custom ingress-nginx configuration, you must retain them when editing the resource.

- Exit the `kubectl edit` command execution to save the configuration.

  Harvester automatically applies the change once the content is saved.

- Verify that the `rke2-ingress-nginx-admission` webhook configuration is re-enabled.

      $ kubectl get validatingwebhookconfiguration rke2-ingress-nginx-admission
      NAME                           WEBHOOKS   AGE
      rke2-ingress-nginx-admission   1          6s

- Verify that the ingress-nginx pods are restarted successfully.

      $ kubectl -n kube-system get po -lapp.kubernetes.io/instance=rke2-ingress-nginx
      NAME                                  READY   STATUS    RESTARTS   AGE
      rke2-ingress-nginx-controller-l2cxz   1/1     Running   0          94s
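The version check in the first step above can be automated by comparing the image tag against v1.12.1. This is a sketch that assumes GNU `sort -V` is available and that the tag follows the `vMAJOR.MINOR.PATCH-suffix` pattern shown in the example output. `image_version` and `version_ge` are hypothetical helper names.

```bash
# Hypothetical helper: extract MAJOR.MINOR.PATCH from an image reference
# such as rancher/nginx-ingress-controller:v1.12.1-hardened1.
image_version() {
  printf '%s\n' "$1" | sed -E 's|.*:v?([0-9]+\.[0-9]+\.[0-9]+).*|\1|'
}

# Hypothetical helper: succeed if version $1 is greater than or equal to
# version $2, using version-aware sorting.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage:
#   img=$(kubectl -n kube-system get po -l"app.kubernetes.io/name=rke2-ingress-nginx" \
#           -ojsonpath='{.items[].spec.containers[].image}')
#   version_ge "$(image_version "$img")" 1.12.1 && echo "new enough"
```

A plain string comparison would rank `1.9.0` above `1.12.1`, which is why the sketch relies on version-aware sorting.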

Upgrade is Stuck in the "Pre-drained" State

The upgrade process may become stuck in the "Pre-drained" state. Kubernetes is supposed to drain the workload on the node, but some factors may cause the process to stall.

A possible cause is processes related to orphan engines of the Longhorn Instance Manager. To determine if this applies to your situation, perform the following steps:

- Check the name of the `instance-manager` pod on the stuck node.

  Example:

  The stuck node is `harvester-node-1`, and the name of the Instance Manager pod is `instance-manager-d80e13f520e7b952f4b7593fc1883e2a`.

      $ kubectl get pods -n longhorn-system --field-selector spec.nodeName=harvester-node-1 | grep instance-manager
      instance-manager-d80e13f520e7b952f4b7593fc1883e2a   1/1     Running   0          3d8h

- Check the Longhorn Manager logs for informational messages.

  Example:

      $ kubectl -n longhorn-system logs daemonsets/longhorn-manager
      ...
      time="2025-01-14T00:00:01Z" level=info msg="Node instance-manager-d80e13f520e7b952f4b7593fc1883e2a is marked unschedulable but removing harvester-node-1 PDB is blocked: some volumes are still attached InstanceEngines count 1 pvc-9ae0e9a5-a630-4f0c-98cc-b14893c74f9e-e-0" func="controller.(*InstanceManagerController).syncInstanceManagerPDB" file="instance_manager_controller.go:823" controller=longhorn-instance-manager node=harvester-node-1

  The `instance-manager` pod cannot be drained because of the engine `pvc-9ae0e9a5-a630-4f0c-98cc-b14893c74f9e-e-0`.

- Check if the engine is still running on the stuck node.

  Example:

      $ kubectl -n longhorn-system get engines.longhorn.io pvc-9ae0e9a5-a630-4f0c-98cc-b14893c74f9e-e-0 -o jsonpath='{"Current state: "}{.status.currentState}{"\nNode ID: "}{.spec.nodeID}{"\n"}'
      Current state: stopped
      Node ID:

  The issue likely exists if the output shows that the engine is not running, or if the engine is not found at all.

- Check if all volumes are healthy.

      kubectl get volumes -n longhorn-system -o yaml | yq '.items[] | select(.status.state == "attached")| .status.robustness'

  All volumes must be marked `healthy`. If this is not the case, please report the issue.

- Remove the `instance-manager` pod's PodDisruptionBudget (PDB).

  Example:

      kubectl delete pdb instance-manager-d80e13f520e7b952f4b7593fc1883e2a -n longhorn-system
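The volume health check in the steps above can be turned into a guard, so that the PDB is removed only when every attached volume reports `healthy`. This is a minimal sketch that assumes `yq` prints one unquoted robustness value per line. `all_healthy` is a hypothetical helper name.

```bash
# Hypothetical helper: read one robustness value per line on stdin and
# succeed only if no line differs from "healthy". Empty input (no
# attached volumes) also counts as success.
all_healthy() {
  ! grep -qv '^healthy$'
}

# Usage:
#   kubectl get volumes -n longhorn-system -o yaml \
#     | yq '.items[] | select(.status.state == "attached")| .status.robustness' \
#     | all_healthy && echo "all attached volumes are healthy"
```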